How to audit a website’s sitemap to identify and fix crawling and indexing issues

One of the hardest things as an SEO is identifying the root cause of poor organic performance.

Often, it is a lengthy process of elimination.

In many cases, technical SEO is about making sure a website’s foundations are correct, and a correctly configured sitemap can increase Google’s trust in the hints it finds on a website.

Many SEOs disregard the importance of a clean sitemap. While a clean sitemap won’t result in instant and measurable rankings, a messy sitemap with 3XX and 4XX URLs will certainly not help.

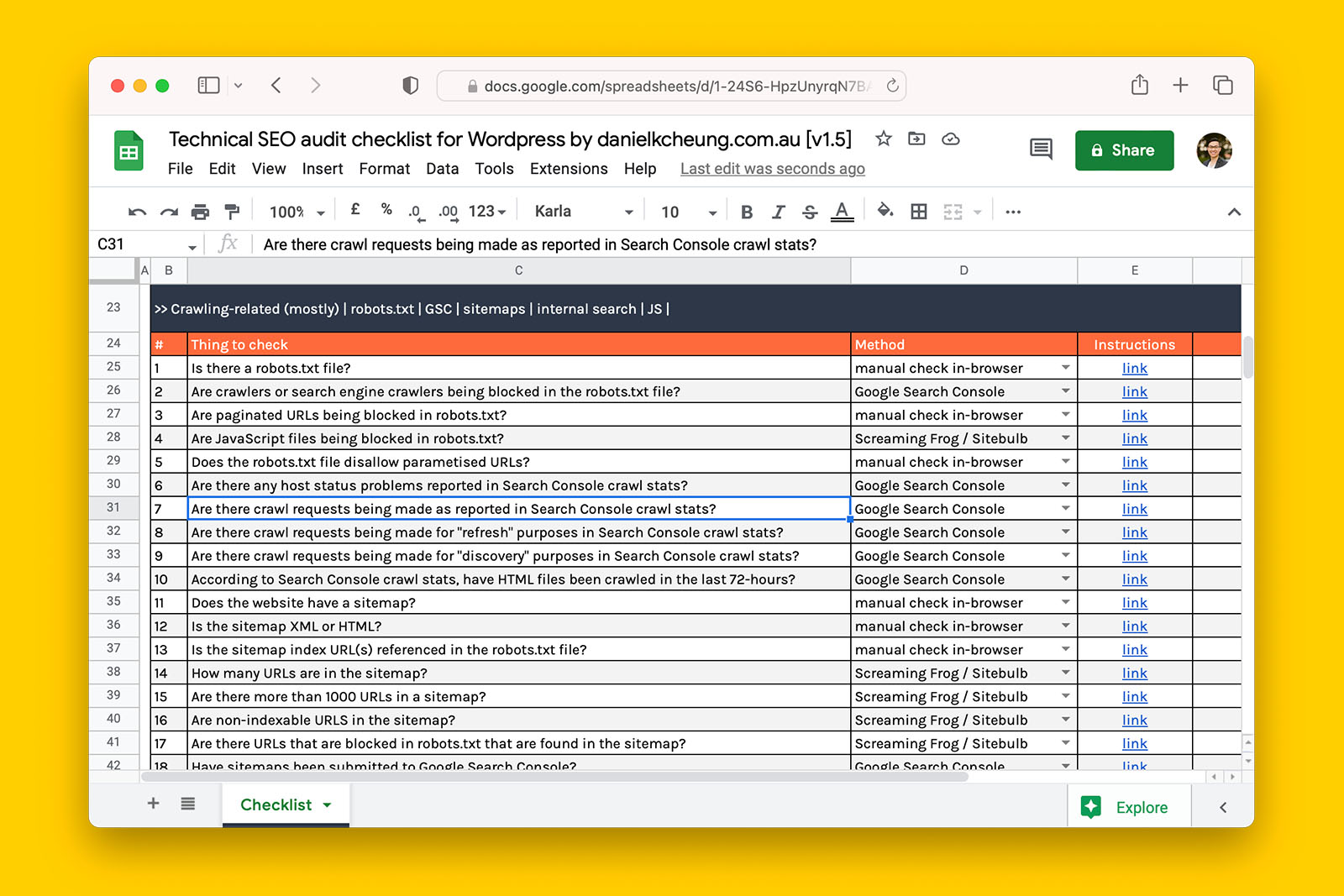

This post is an extension of ‘Downloadable technical SEO audit checklist template for WordPress and WooCommerce websites’. If you haven’t already, make a copy of the checklist before following this guide.

Let’s get started!

Does the website have a sitemap?

How to find if a website has a sitemap:

- Append “sitemap.xml” or “sitemap_index.xml” to the root domain (e.g., www.danielkcheung.com/sitemap.xml)

- Check if robots.txt references the sitemap

- Look in the primary and footer navigation menus for an HTML sitemap

- Check in Google Search Console.
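The first two checks can be sketched in a few lines of Python. This is only an illustration: the helper name `candidate_sitemap_urls` is hypothetical, the two paths are the common defaults mentioned above, and a site can publish its sitemap at any other address.

```python
from urllib.parse import urlsplit, urlunsplit

# Common default locations only (an assumption); a sitemap can live anywhere.
COMMON_SITEMAP_PATHS = ["/sitemap.xml", "/sitemap_index.xml"]


def candidate_sitemap_urls(site_url):
    """Return likely sitemap URLs, plus the robots.txt URL, for a site."""
    # Assume https when no scheme is given.
    if "://" not in site_url:
        site_url = "https://" + site_url
    parts = urlsplit(site_url)
    # Keep only scheme and host so any path on the input is ignored.
    root = urlunsplit((parts.scheme, parts.netloc, "", "", ""))
    return [root + path for path in COMMON_SITEMAP_PATHS] + [root + "/robots.txt"]


for url in candidate_sitemap_urls("www.danielkcheung.com"):
    print(url)
```

Open each printed URL in a browser (or request it with a crawler) to see which ones exist.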

Possible answers:

- yes

- no

What this means:

> If ‘yes’, a sitemap exists. This is a good start but warrants further investigation.

>> If ‘no’, consider creating one or more sitemaps and submitting them to Google Search Console and Bing Webmaster Tools.

Is the sitemap XML or HTML?

How to work out if a website’s sitemap is HTML or XML:

- Does the sitemap end in “.XML”?

- If it does, check ‘yes’ in the spreadsheet

- If it does not end in “.XML” and follows a subfolder path such as “domain/sitemap”, check ‘no’ in the spreadsheet.

- Does the sitemap end in “.GZ” extension?

- If it does, check ‘yes’ in the spreadsheet as this is a compressed XML sitemap

- If not, it is not a compressed XML sitemap.
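The extension check above can be expressed as a small Python helper. It is a rough sketch: `classify_sitemap_url` is a hypothetical name, and the extension is only a hint, since a server can return XML from a URL that has no “.xml” ending.

```python
from urllib.parse import urlsplit


def classify_sitemap_url(url):
    """Guess the sitemap type from the URL path extension only."""
    path = urlsplit(url).path.lower()
    if path.endswith(".gz"):
        return "compressed XML"
    if path.endswith(".xml"):
        return "XML"
    # No XML-style extension, e.g. "domain/sitemap": likely an HTML page.
    return "probably HTML"


print(classify_sitemap_url("https://example.com/sitemap.xml"))
print(classify_sitemap_url("https://example.com/sitemap.xml.gz"))
print(classify_sitemap_url("https://example.com/sitemap"))
```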

Possible answers:

- yes

- no

- not applicable

What this means:

> If ‘yes’, an XML sitemap exists.

>> If ‘no’, the website uses an HTML sitemap. This will make it more difficult for you to analyse as crawlers such as Screaming Frog and Sitebulb cannot crawl HTML sitemaps.

>>> If ‘not applicable’, no HTML or XML sitemap exists. If the website has more than 500 indexable URLs, consider creating a sitemap to improve Google’s ability to crawl all internal URLs.

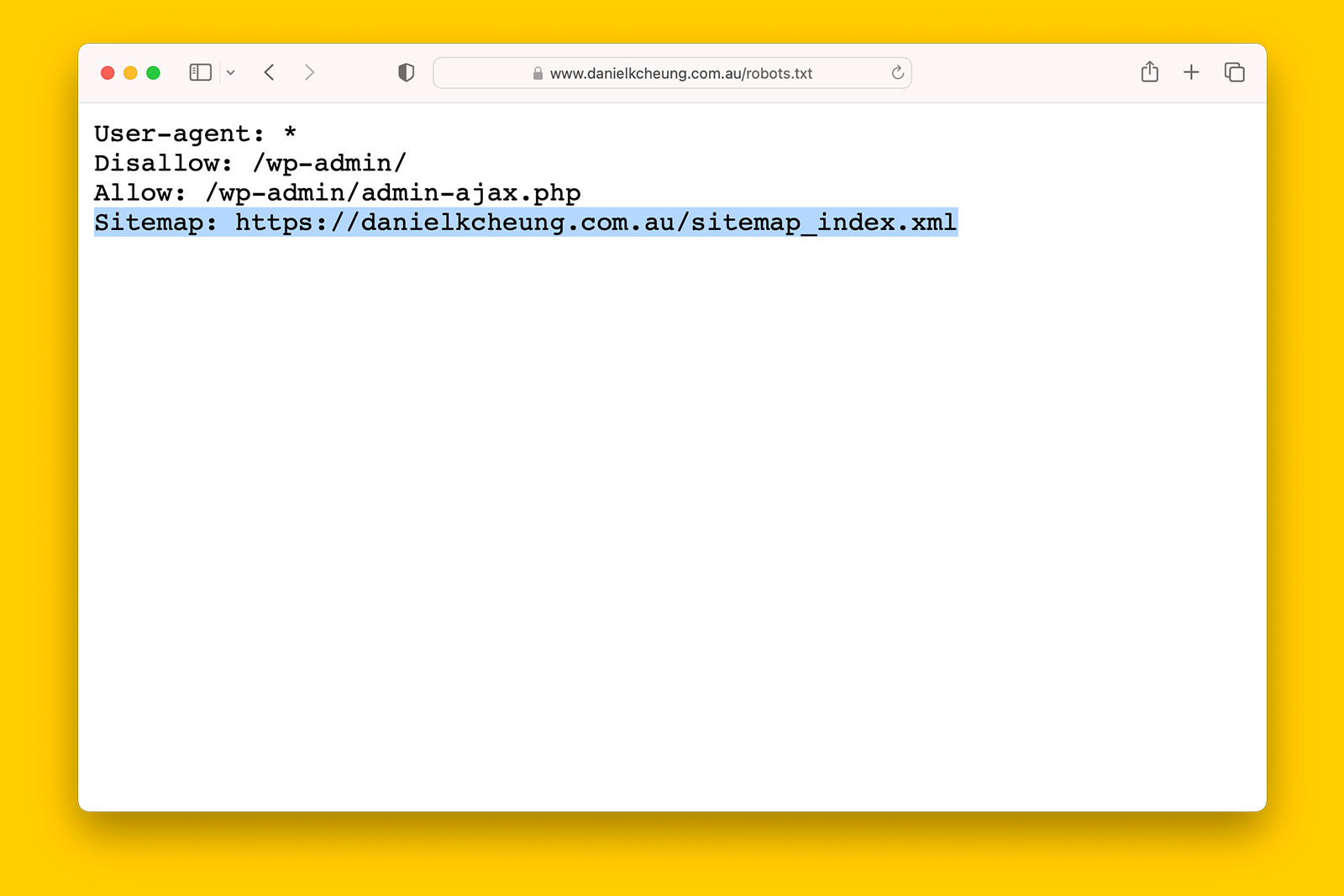

Are the sitemap index URL(s) referenced in the robots.txt file?

How to check if robots.txt mentions the sitemap address:

- Open the robots.txt file in your browser

- Search for “sitemap” and see if a full URL has been provided

- If you see this, check ‘yes’ in the spreadsheet

- If you do not find “sitemap” in the robots.txt, check ‘no’ in the spreadsheet

- If there is no robots.txt file, check ‘not applicable’ in the spreadsheet
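If you would rather not scan the file by eye, the same search can be done on the text of robots.txt. The sketch below assumes you have already saved or fetched the file contents as a string; the function name and the example.com addresses are made up for illustration.

```python
def sitemap_urls_from_robots(robots_txt):
    """Return every URL declared on a 'Sitemap:' line in robots.txt text."""
    urls = []
    for line in robots_txt.splitlines():
        line = line.split("#", 1)[0].strip()  # drop trailing comments
        key, sep, value = line.partition(":")
        # The directive name is matched case-insensitively.
        if sep and key.strip().lower() == "sitemap" and value.strip():
            urls.append(value.strip())
    return urls


example = """User-agent: *
Disallow: /wp-admin/

Sitemap: https://example.com/sitemap_index.xml
"""
print(sitemap_urls_from_robots(example))
```

An empty list corresponds to ‘no’ in the spreadsheet; one or more full URLs corresponds to ‘yes’.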

Possible answers:

- yes

- no

- not applicable

What this means:

> If ‘yes’, the sitemap is referenced in robots.txt and crawlers such as Googlebot can easily find and crawl it.

>> If ‘no’, the sitemap is not referenced in robots.txt. As long as the sitemap has been submitted to Google Search Console, this is not a red flag. If no sitemap has been submitted to GSC, address this ASAP if crawling and indexing are an issue.

How many URLs are in the sitemap?

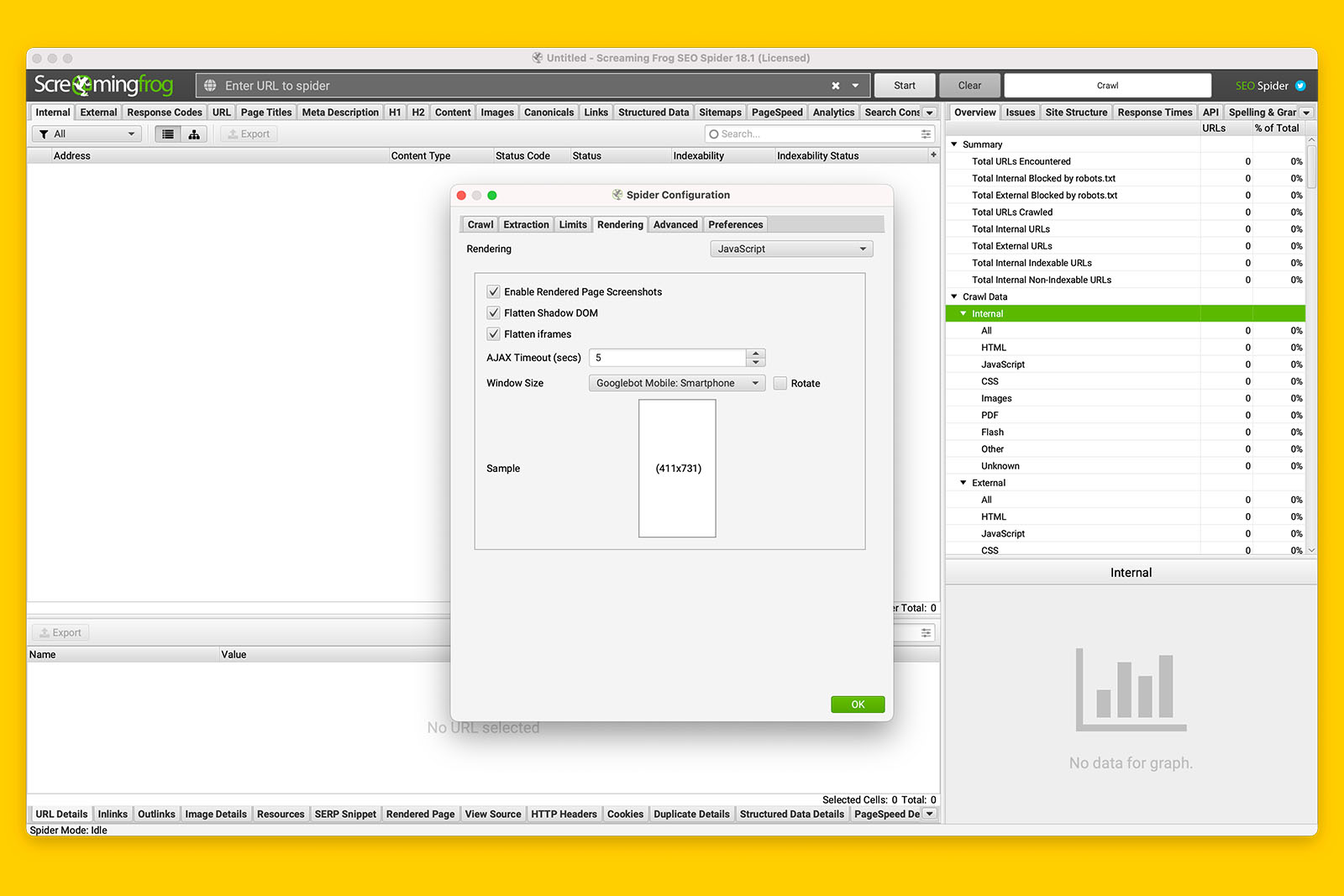

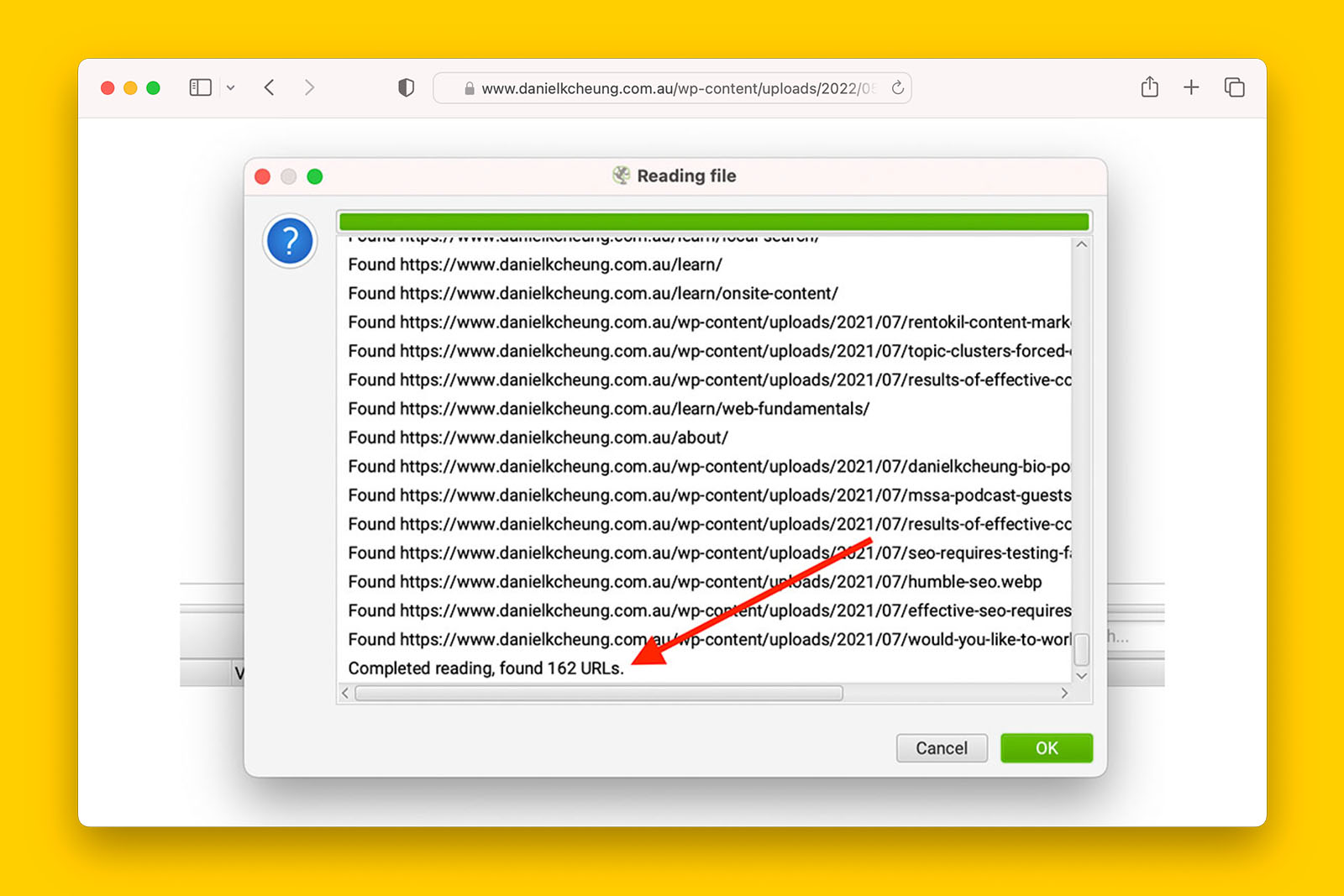

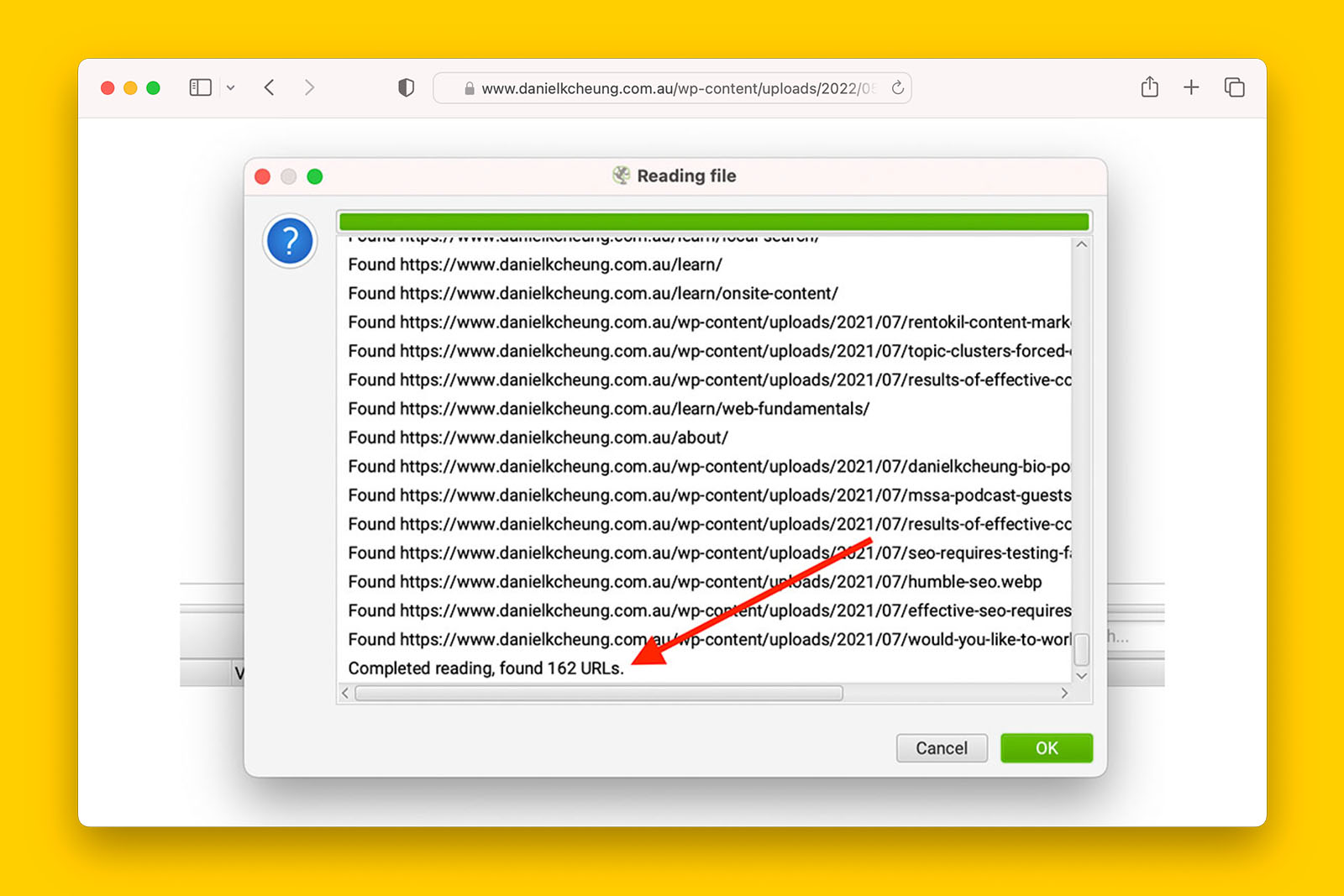

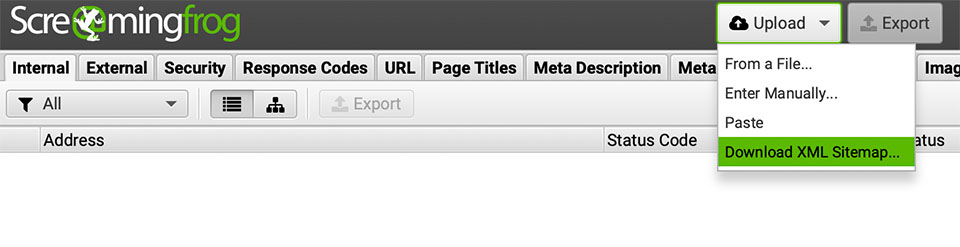

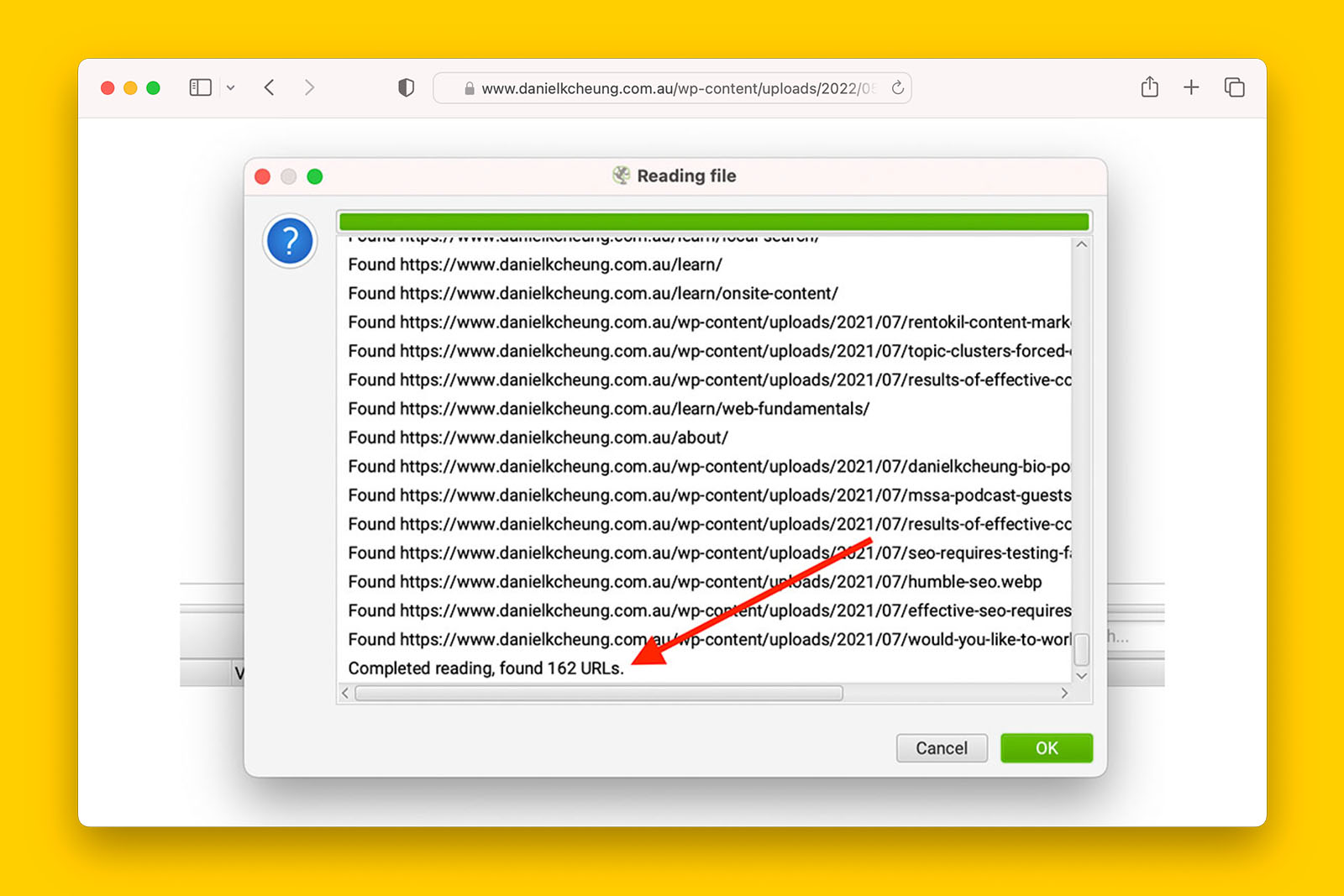

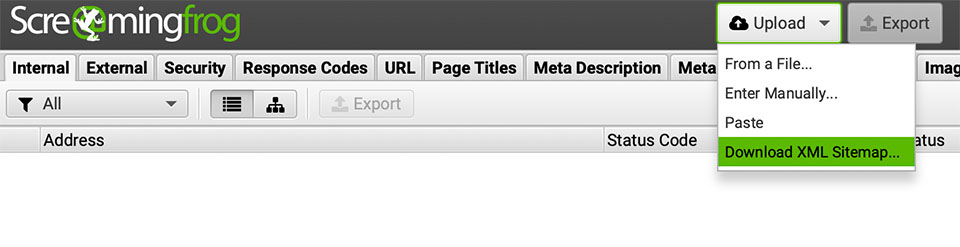

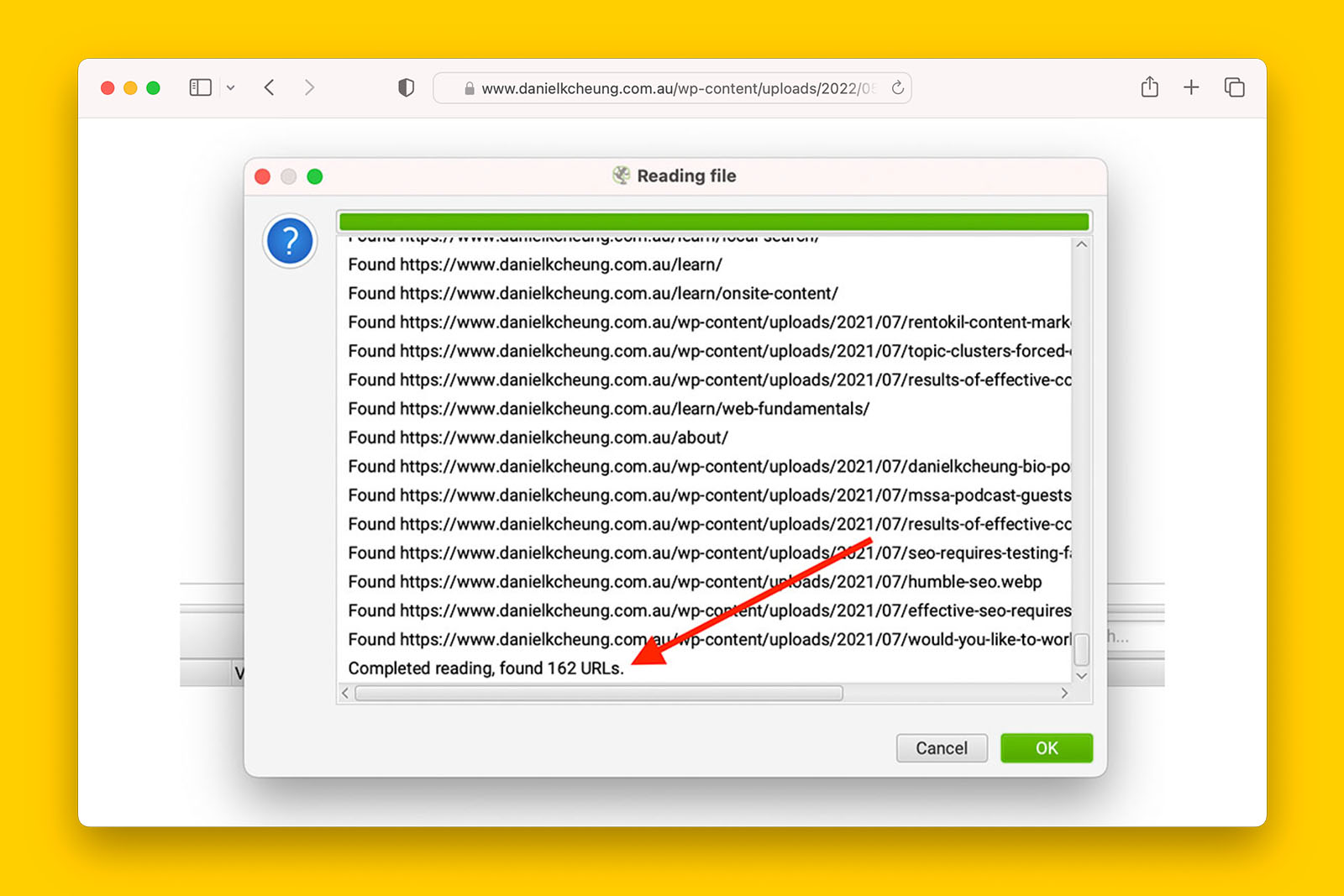

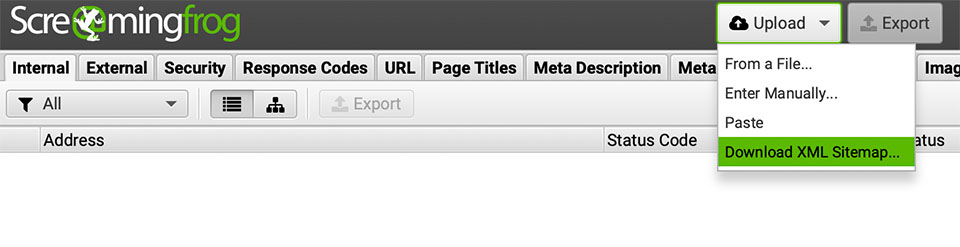

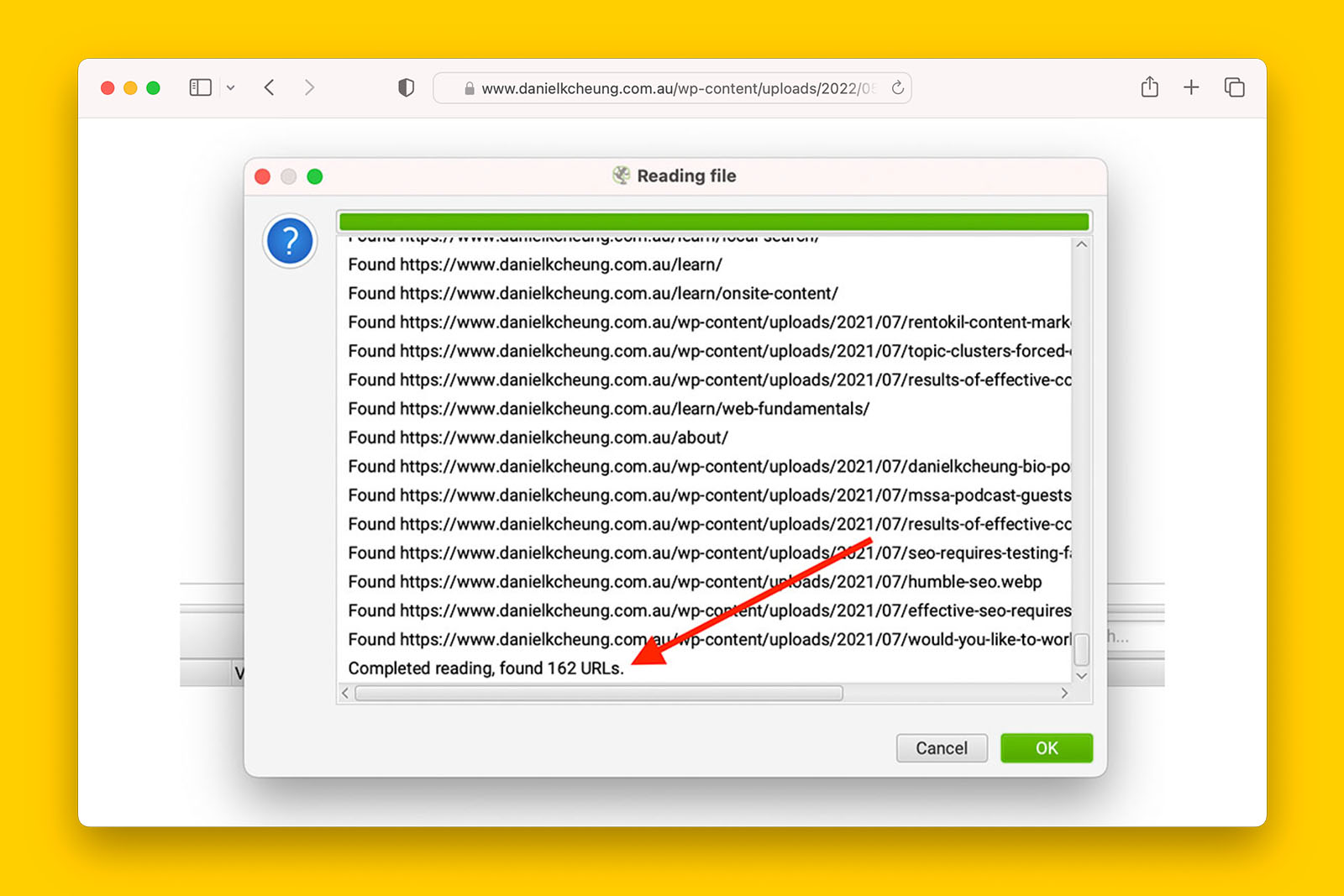

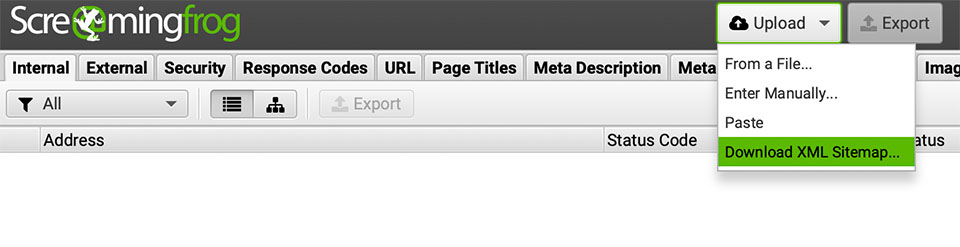

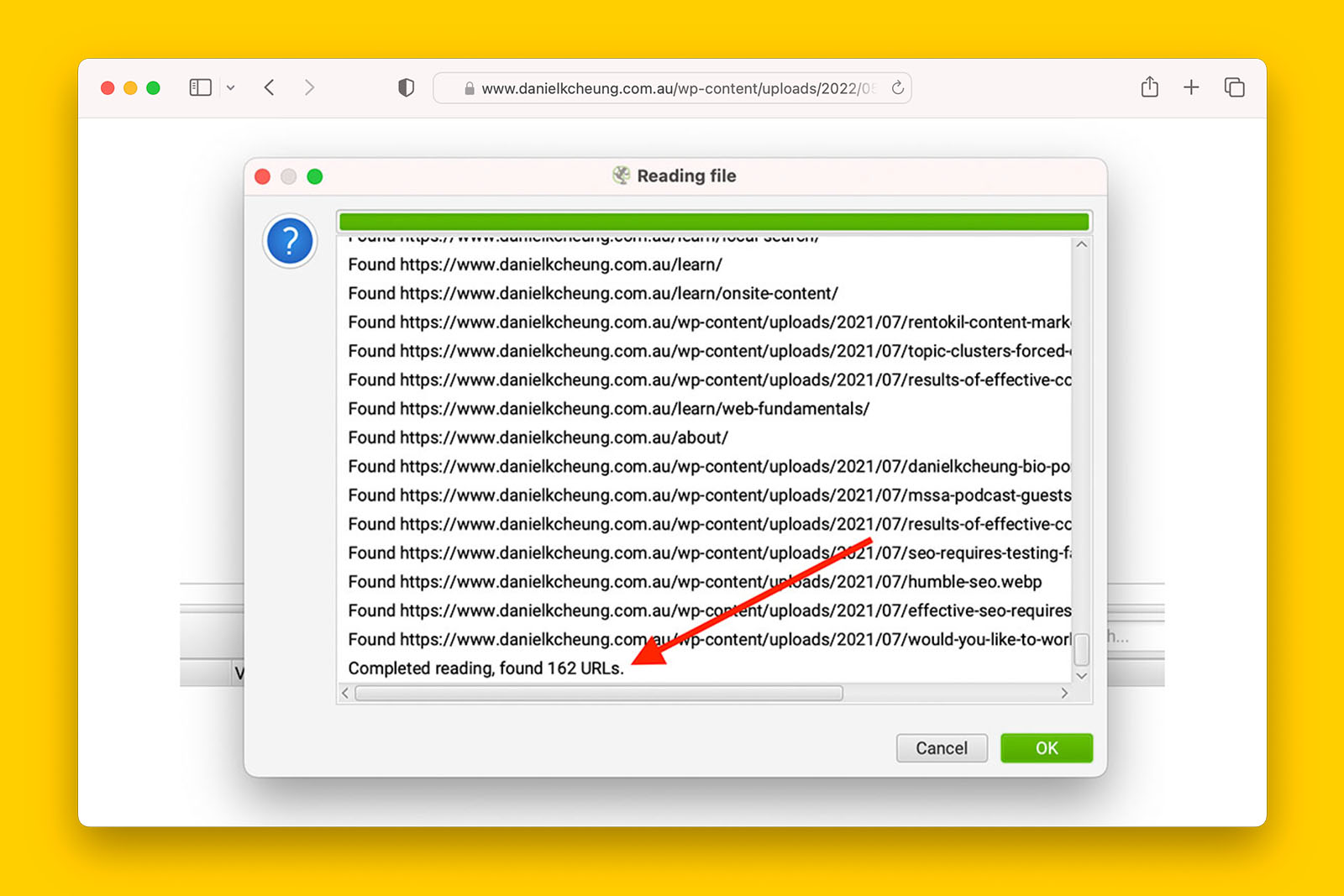

How to find out how many URLs are in a sitemap using Screaming Frog:

- Change mode from Spider to List

- Click on UPLOAD button and select DOWNLOAD XML SITEMAP

- Copy and paste the full URL of the XML sitemap

- Click OK

- Screaming Frog will analyse the sitemap and report the number of URLs found in the XML file.
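If you do not have Screaming Frog to hand, a rough count can also be taken directly from the XML. The sketch below assumes the sitemap follows the standard sitemaps.org namespace and that you already have the file contents as text; `summarise_sitemap` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET

# Standard sitemap protocol namespace.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def summarise_sitemap(xml_text):
    """Return page URLs and child sitemap URLs found in sitemap XML text."""
    root = ET.fromstring(xml_text)
    # <urlset> files list pages; <sitemapindex> files list other sitemaps.
    pages = [loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS) if loc.text]
    children = [loc.text.strip() for loc in root.findall("sm:sitemap/sm:loc", NS) if loc.text]
    return {"page_urls": pages, "child_sitemaps": children}


example = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/about/</loc></url>
</urlset>"""

summary = summarise_sitemap(example)
print(len(summary["page_urls"]), "URLs,", len(summary["child_sitemaps"]), "child sitemaps")
```

For a sitemap index, repeat the count for each child sitemap and add the totals together.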

Are there more than 1000 URLs in a sitemap?

Refer to the previous criterion to find out how many URLs are contained in a sitemap.

Possible answers:

- yes

- no

- not applicable

What this means:

> If ‘yes’, consider splitting large sitemaps into smaller ones if you are experiencing crawling and indexing issues.

>> If ‘no’, the website is relatively small and probably does not warrant more than one sitemap.
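As a rough illustration of what splitting involves, the sketch below groups a list of URLs into files of at most 1000 URLs each. The 1000 figure is simply the threshold used in this checklist, not a protocol limit, and the `sitemap-{n}.xml` file names are hypothetical. On WordPress sites, the SEO plugin normally handles this for you.

```python
def split_into_sitemaps(urls, max_per_file=1000, name_pattern="sitemap-{n}.xml"):
    """Group URLs into smaller sitemap files of at most max_per_file URLs."""
    if max_per_file < 1:
        raise ValueError("max_per_file must be at least 1")
    files = {}
    for start in range(0, len(urls), max_per_file):
        # Number the files from 1 in the order the URLs were given.
        name = name_pattern.format(n=start // max_per_file + 1)
        files[name] = urls[start:start + max_per_file]
    return files


urls = [f"https://example.com/product-{i}/" for i in range(2500)]
for name, chunk in split_into_sitemaps(urls).items():
    print(name, len(chunk))
```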

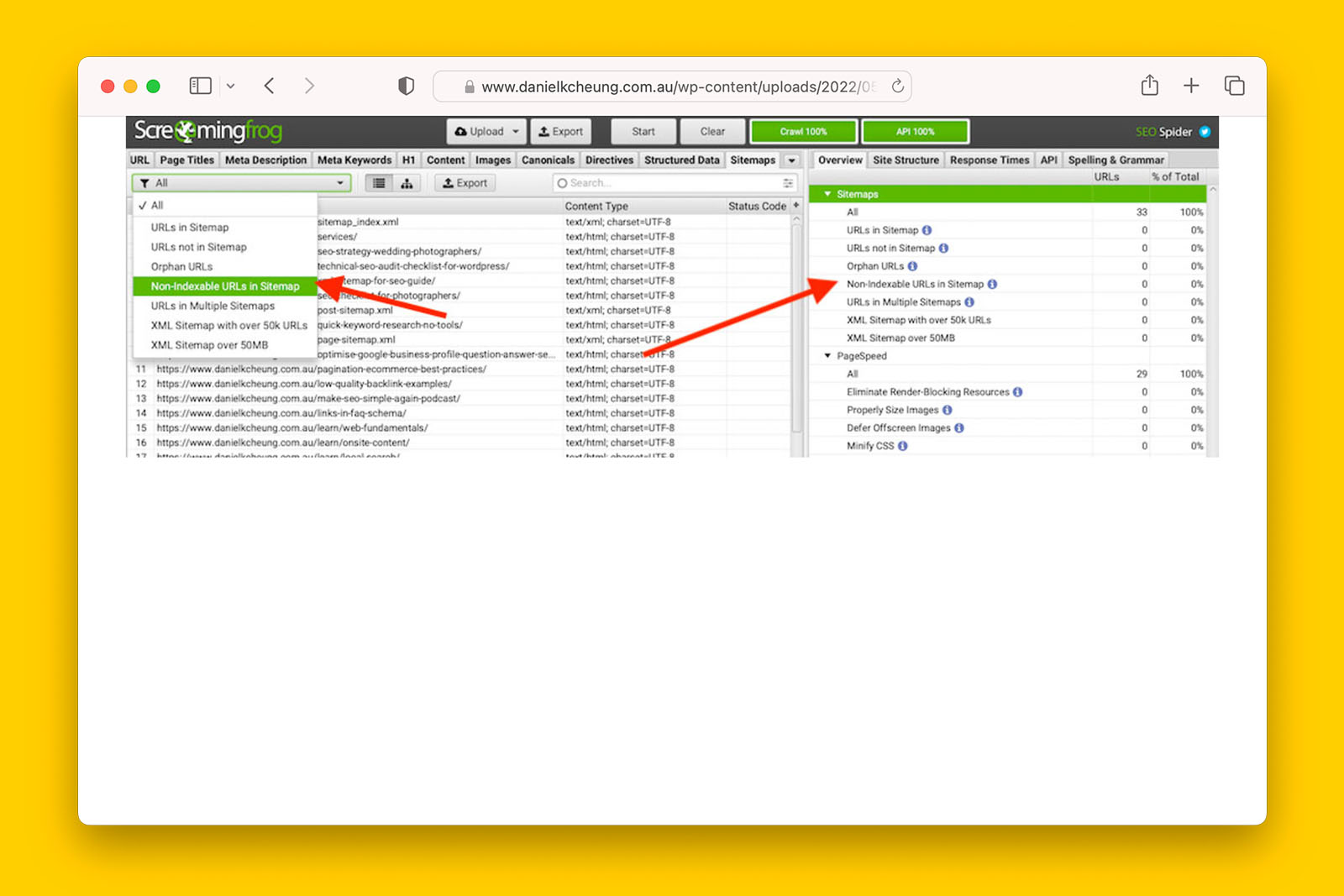

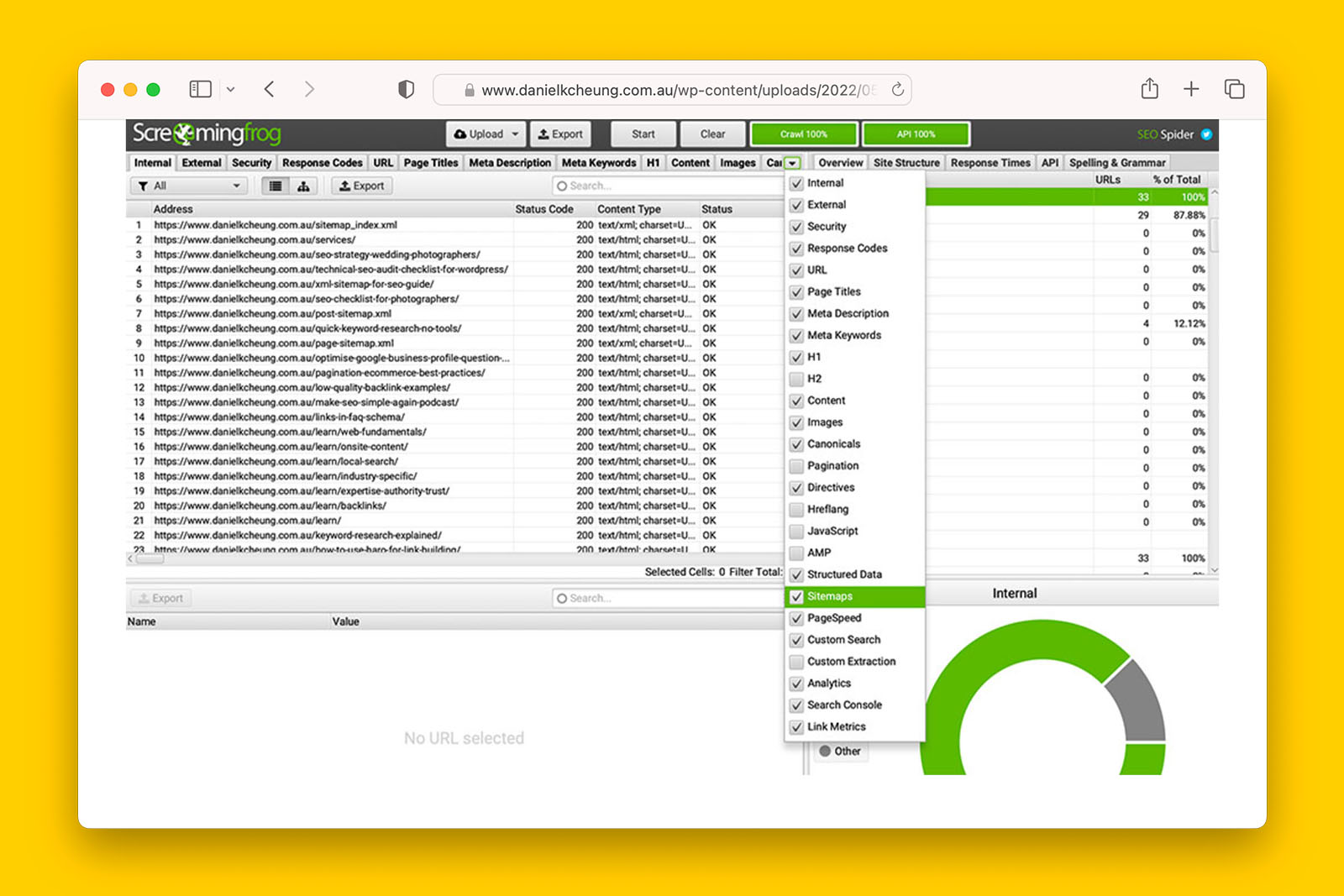

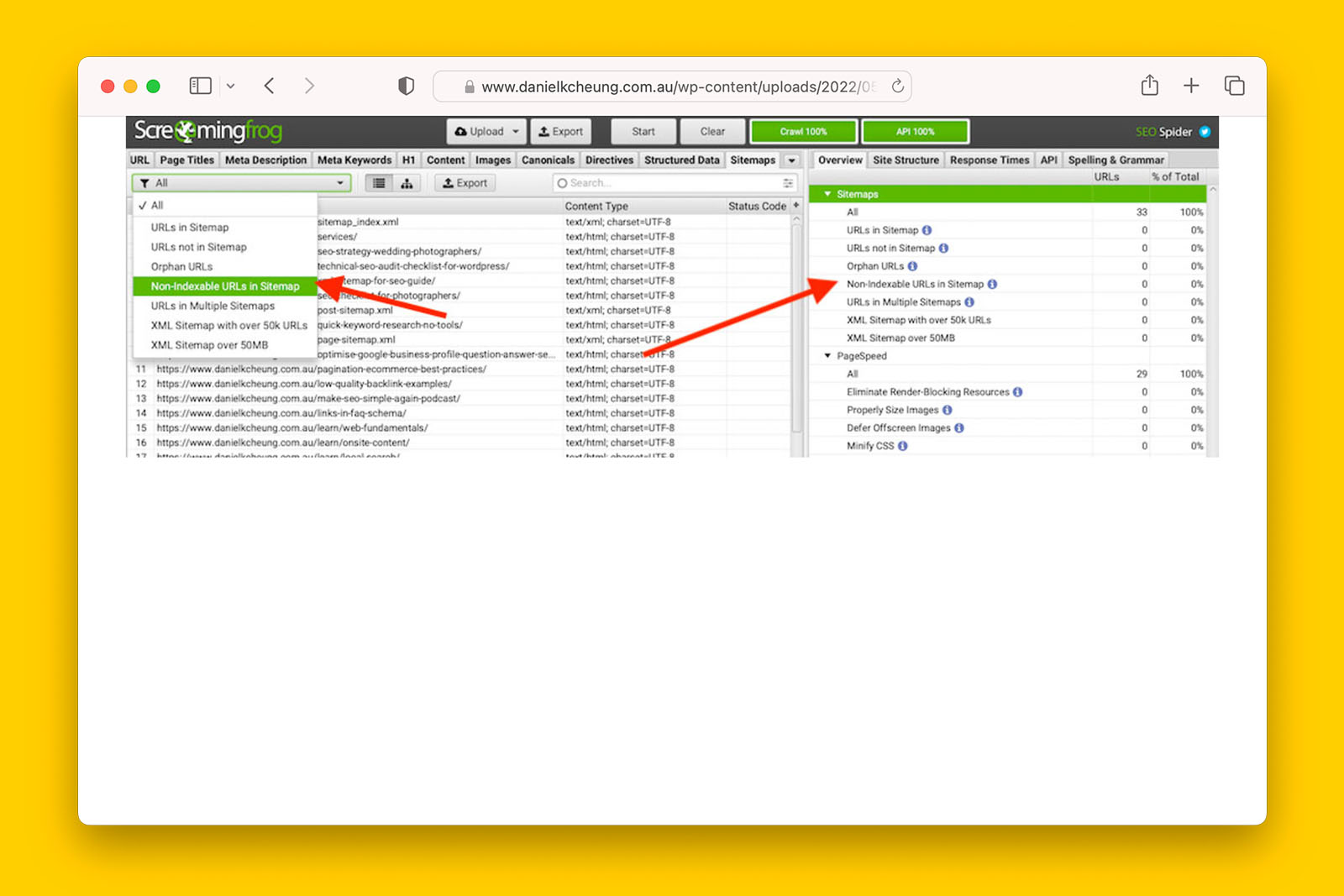

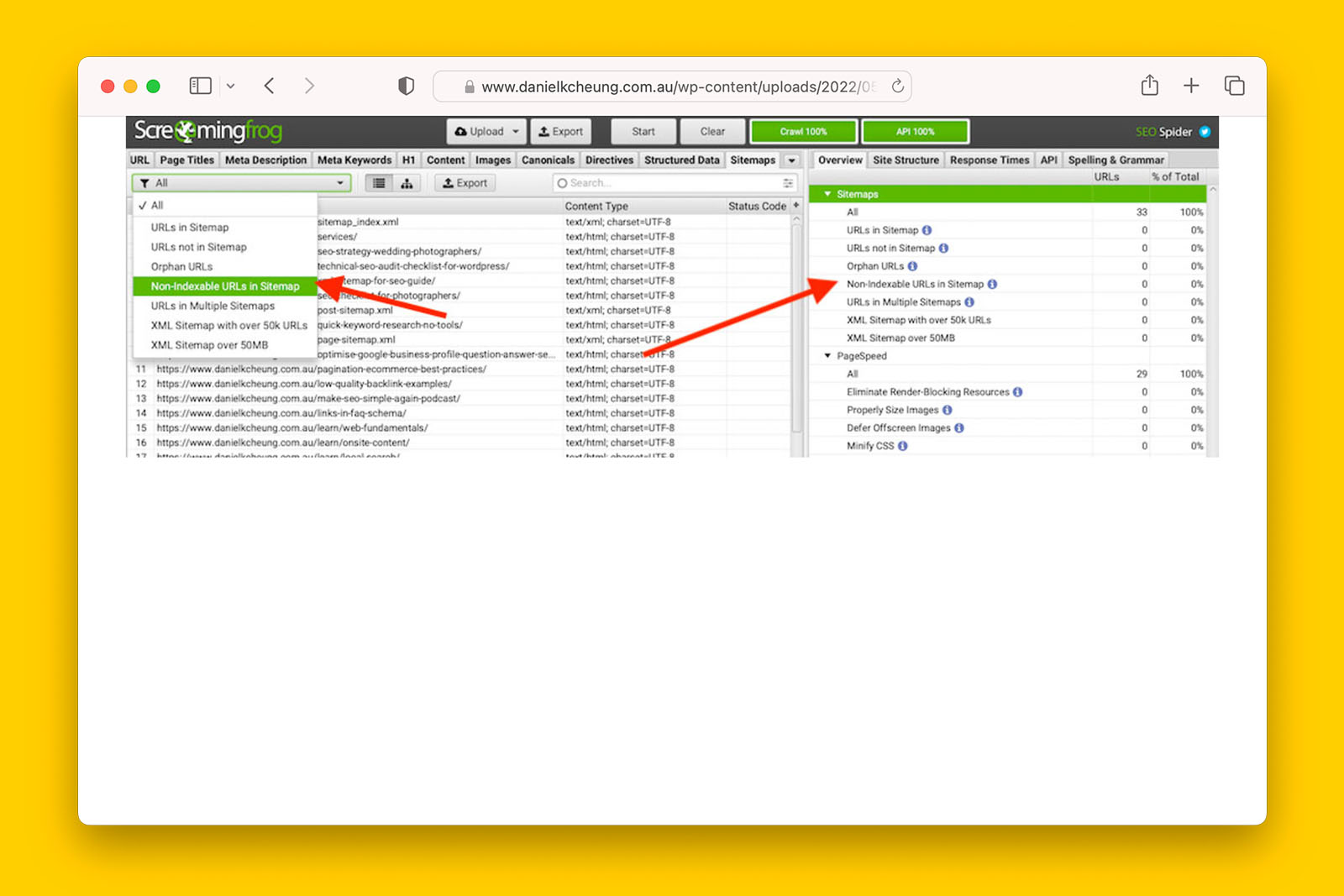

Are non-indexable URLs in the sitemap?

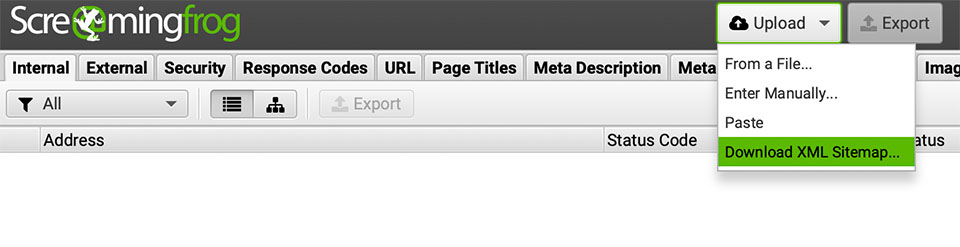

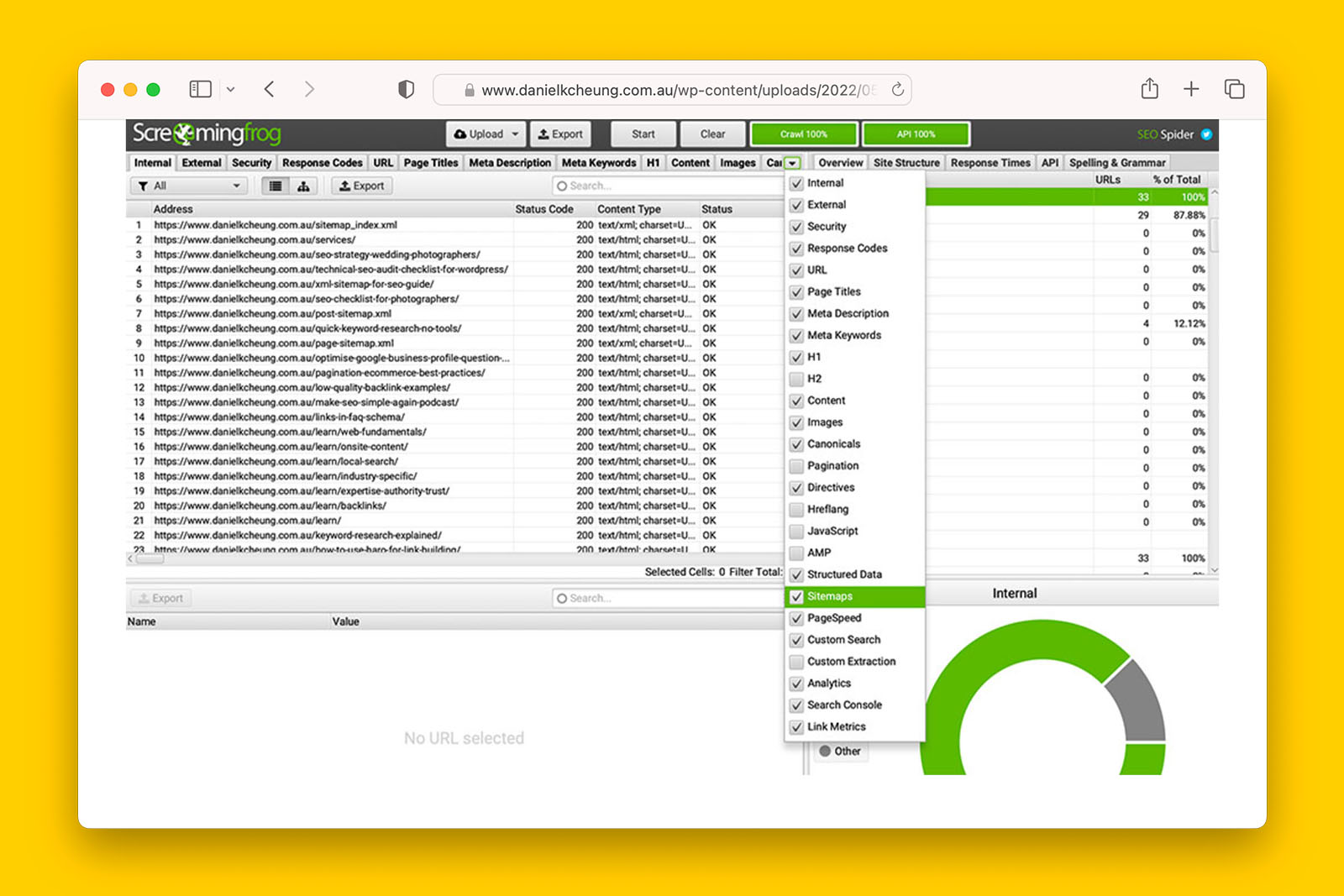

How to identify if non-indexable URLs are in a sitemap using Screaming Frog:

- Copy the full address of the sitemap file (e.g., domain/sitemap.xml)

- In Screaming Frog, go to MODE > LIST

- Click UPLOAD button and select DOWNLOAD XML SITEMAP then paste the full address of the sitemap from step #1 then click OK button

- Click OK button and this will initiate Screaming Frog to crawl all the URLs discovered from the sitemap

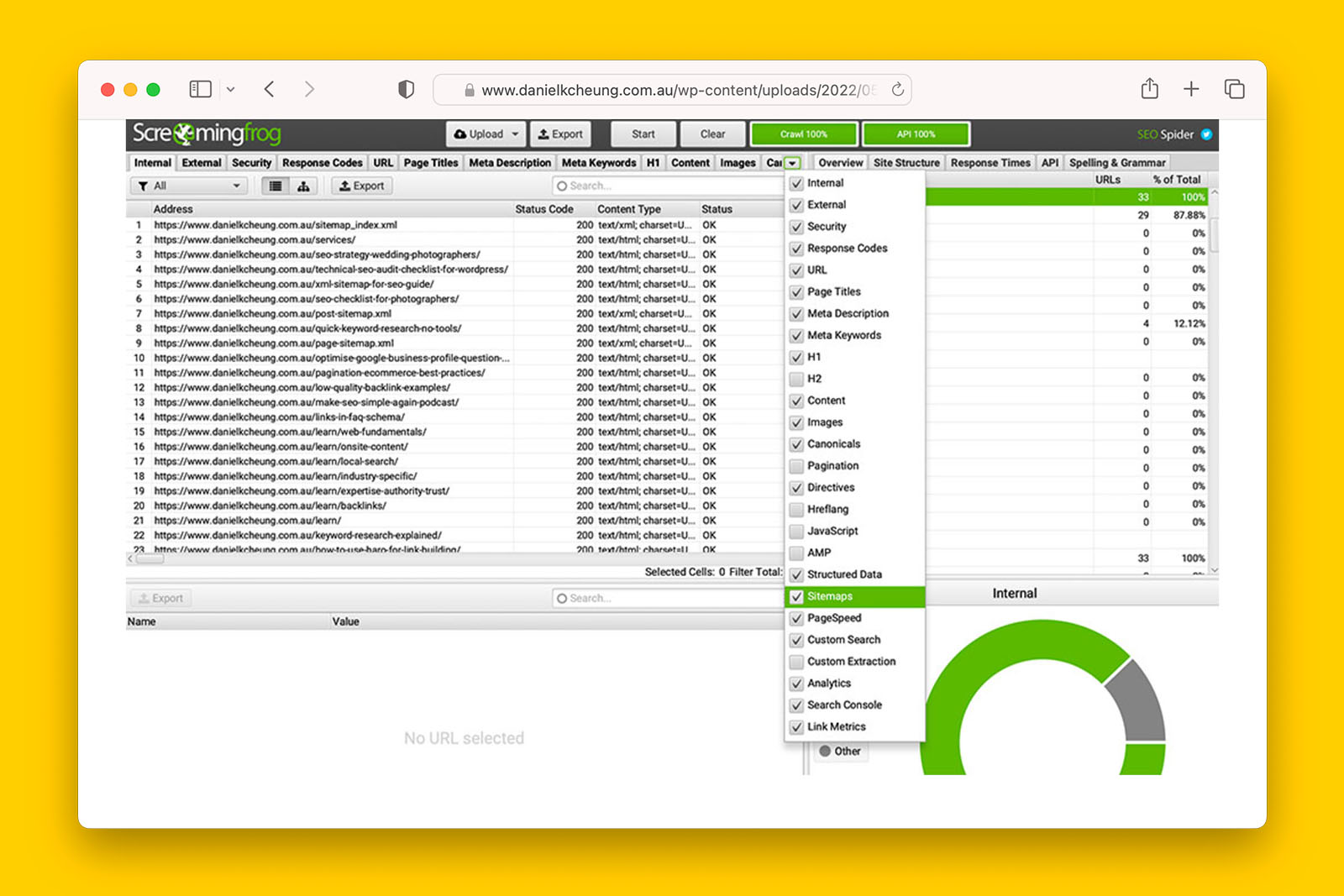

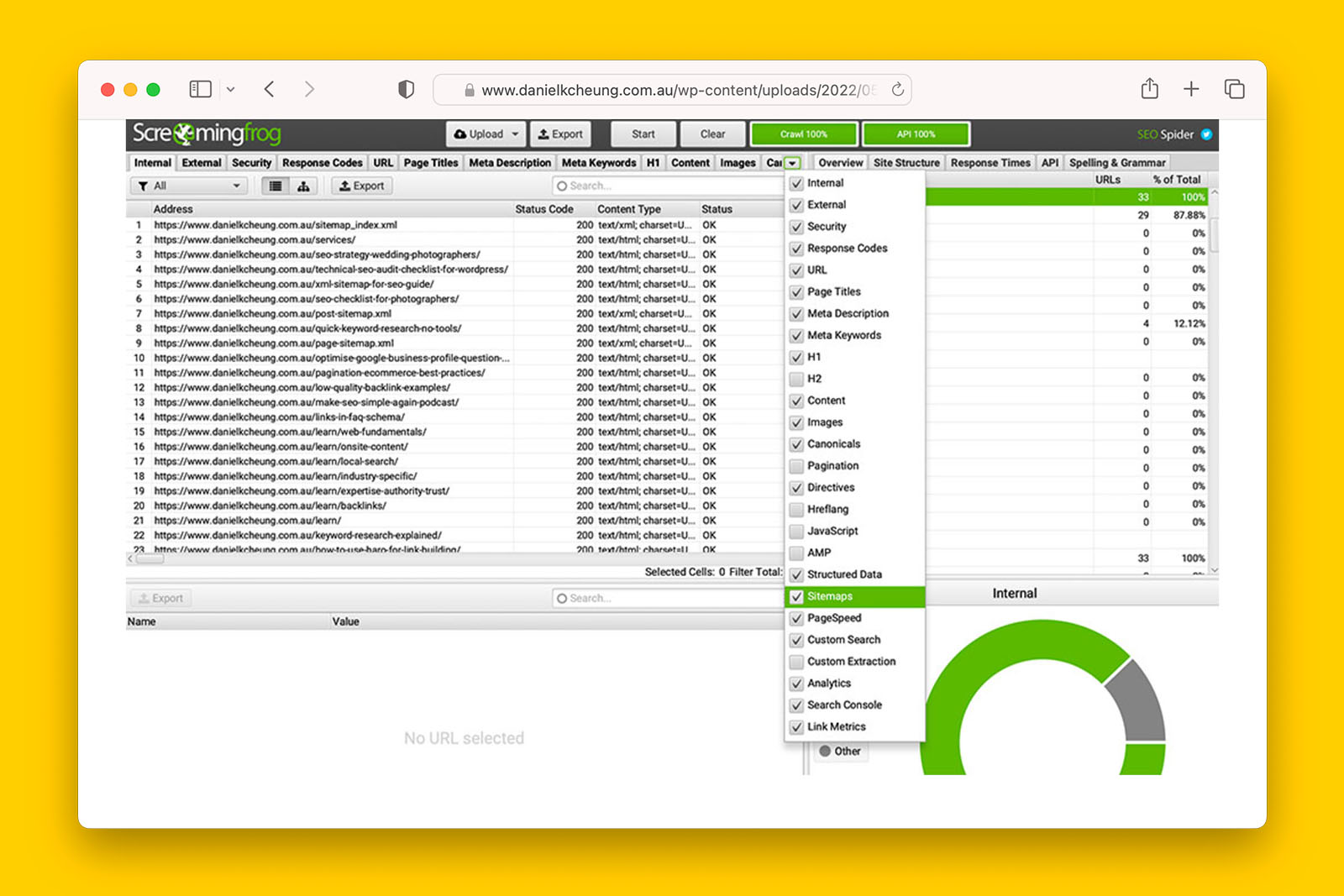

- Upon crawl completion, go to SITEMAP tab

Note: if you do not see SITEMAP tab, click on the toggle to reveal a list of tabs

- In OVERVIEW tab, you will see the total number of non-indexable URLs that have been included in the sitemap:

- If the number of non-indexable URLs in sitemap > 0, check ‘yes’ in the spreadsheet

- If the number of non-indexable URLs in sitemap = 0, check ‘no’ in the spreadsheet

- You can also extract a full list of all these non-indexable URLs that are currently in the sitemap.
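To make “non-indexable” concrete, here is a simplified sketch of the kind of rules a crawler applies to each sitemap URL. It is not how Screaming Frog is implemented; the function name, the parameters and the order of the checks are assumptions made for illustration.

```python
def indexability(url, status, noindex=False, canonical=None, blocked=False):
    """Return a simple indexability label for one crawled URL."""
    if blocked:
        return "blocked by robots.txt"
    if status != 200:
        return f"non-200 status ({status})"
    if noindex:
        return "noindex"
    # A canonical pointing at a different URL means this one is canonicalised.
    if canonical and canonical != url:
        return "canonicalised"
    return "indexable"


# Hypothetical crawl rows: (url, status, noindex, canonical)
rows = [
    ("https://example.com/", 200, False, "https://example.com/"),
    ("https://example.com/old/", 301, False, None),
    ("https://example.com/thanks/", 200, True, None),
]
non_indexable = [r[0] for r in rows if indexability(r[0], r[1], r[2], r[3]) != "indexable"]
print(len(non_indexable), "non-indexable URLs in sitemap")
```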

Possible answers:

- yes

- no

- not applicable

What this means:

> If ‘yes’, this means that there are non-indexable URLs in the sitemap(s). If you see a high number of 301 and 404 URLs in the sitemap, investigate how the sitemap is generated. For most WordPress sites with Yoast, sitemaps are generated and updated automatically.

>> If ‘no’, only indexable URLs are found in the sitemap. This is usually a good thing and indicates that any crawling or indexing issues the site is experiencing are not due to sitemap inefficiencies.

Are there URLs that are blocked in robots.txt that are found in the sitemap?

How to check if URLs blocked in robots.txt are included in a sitemap using Screaming Frog:

- Follow steps #1-8 in ‘how to identify if non-indexable URLs are in a sitemap using Screaming Frog’

- Navigate to the SITEMAPS tab and look at the INDEXABILITY STATUS:

- If you see “blocked by robots.txt”, check ‘yes’ in the spreadsheet

- If you see “canonicalised”, “noindex” or if there are no URLs shown in the tab, check ‘no’ in the spreadsheet
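The same comparison can be approximated with Python’s standard library. This sketch assumes you have the robots.txt text and a list of sitemap URLs; the function name and example rules are made up. Note that `urllib.robotparser` does not replicate every detail of how Googlebot interprets robots.txt (wildcard patterns, for example), so treat the result as a first pass rather than a final answer.

```python
from urllib.robotparser import RobotFileParser


def blocked_sitemap_urls(robots_txt, sitemap_urls, user_agent="Googlebot"):
    """Return the sitemap URLs that robots.txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in sitemap_urls if not parser.can_fetch(user_agent, url)]


robots_txt = """User-agent: *
Disallow: /cart/
"""
sitemap_urls = [
    "https://example.com/shop/",
    "https://example.com/cart/",
]
print(blocked_sitemap_urls(robots_txt, sitemap_urls))
```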

Possible answers:

- yes

- no

- not applicable

What this means:

Note: Google can index URLs that have been blocked in robots.txt – you will see these in Search Console’s COVERAGE tab as “Valid with warning”.

> If ‘yes’, there are blocked URLs contained in the sitemap. Investigate whether these URLs should be blocked in robots.txt or whether or not these URLs should be excluded from the sitemap.

>> If ‘no’, there are no URLs blocked in robots.txt found in the sitemap.

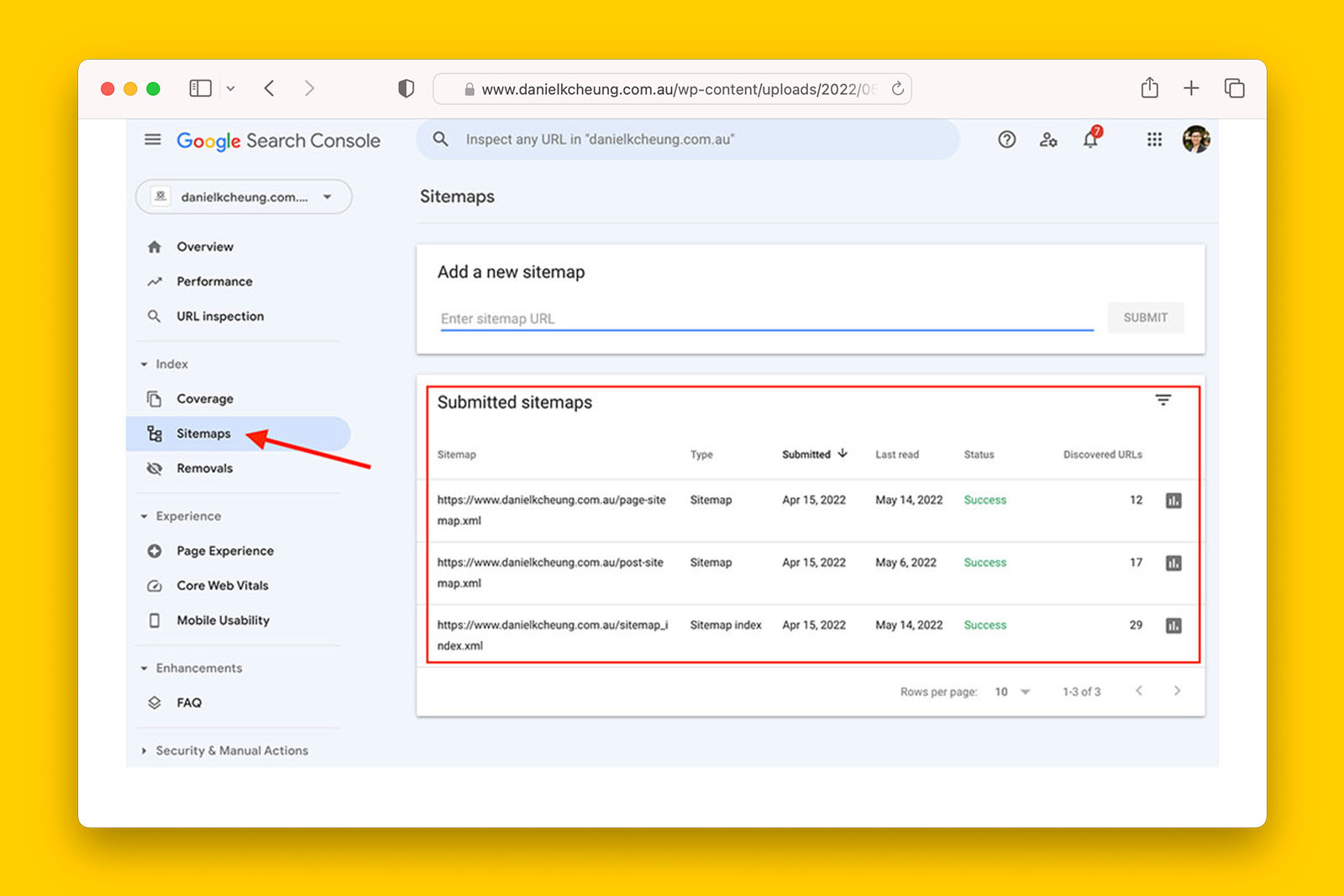

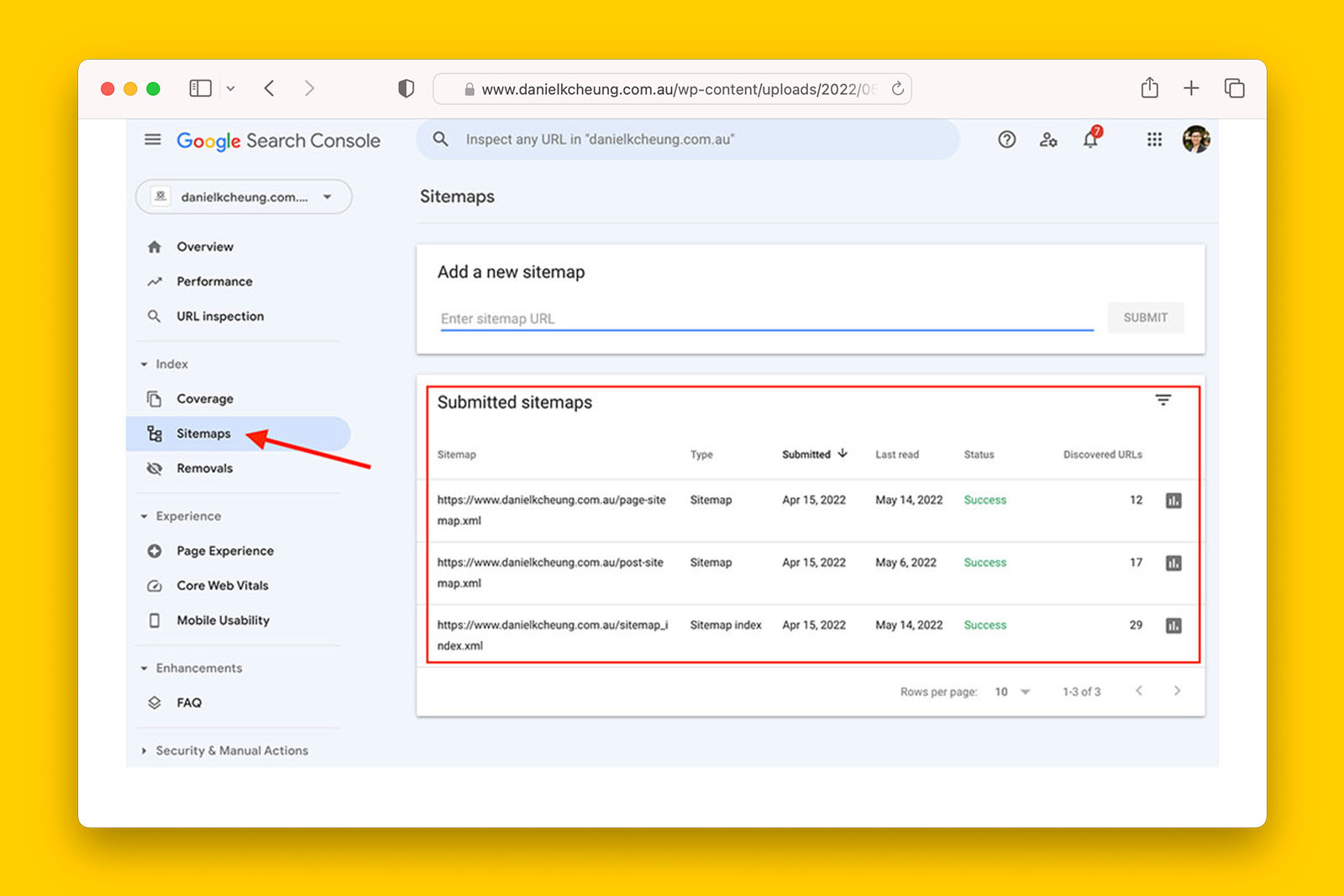

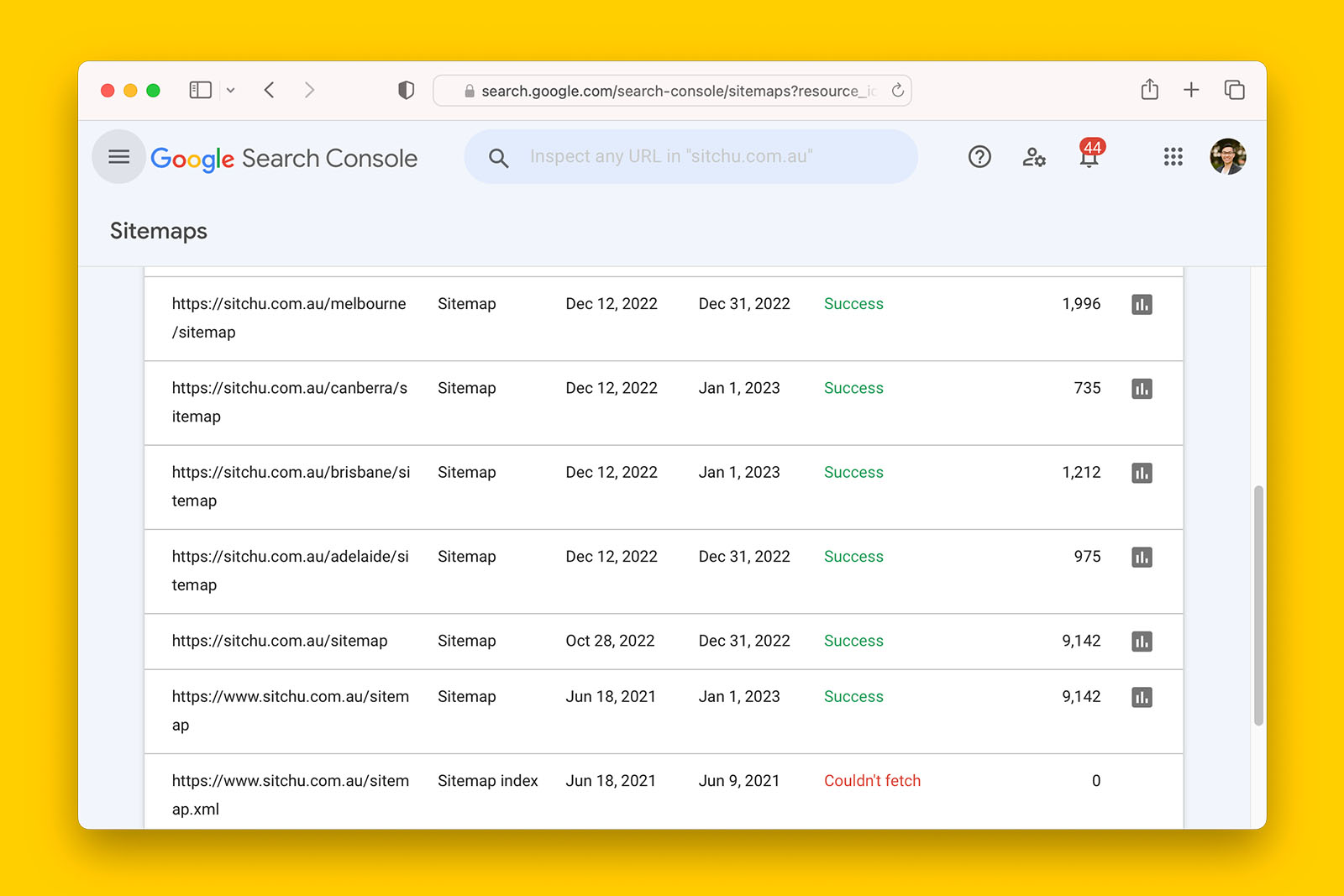

Have sitemaps been submitted to Google Search Console?

How to check if sitemaps have been submitted to GSC:

- Log into Google Search Console

- Go to INDEX > SITEMAPS

Possible answers:

- yes

- no

- not applicable

What this means:

> If ‘yes’, sitemaps have been submitted to Search Console. Now check if they have been processed.

>> If ‘no’ and if a sitemap does exist, manually submit the sitemap URL to GSC.

Recommended reading:

- ‘submit your sitemap to Google’ by Google Search Console

- ‘XML sitemap best practices for SEO so that you can get the most out of them’ by Daniel K Cheung

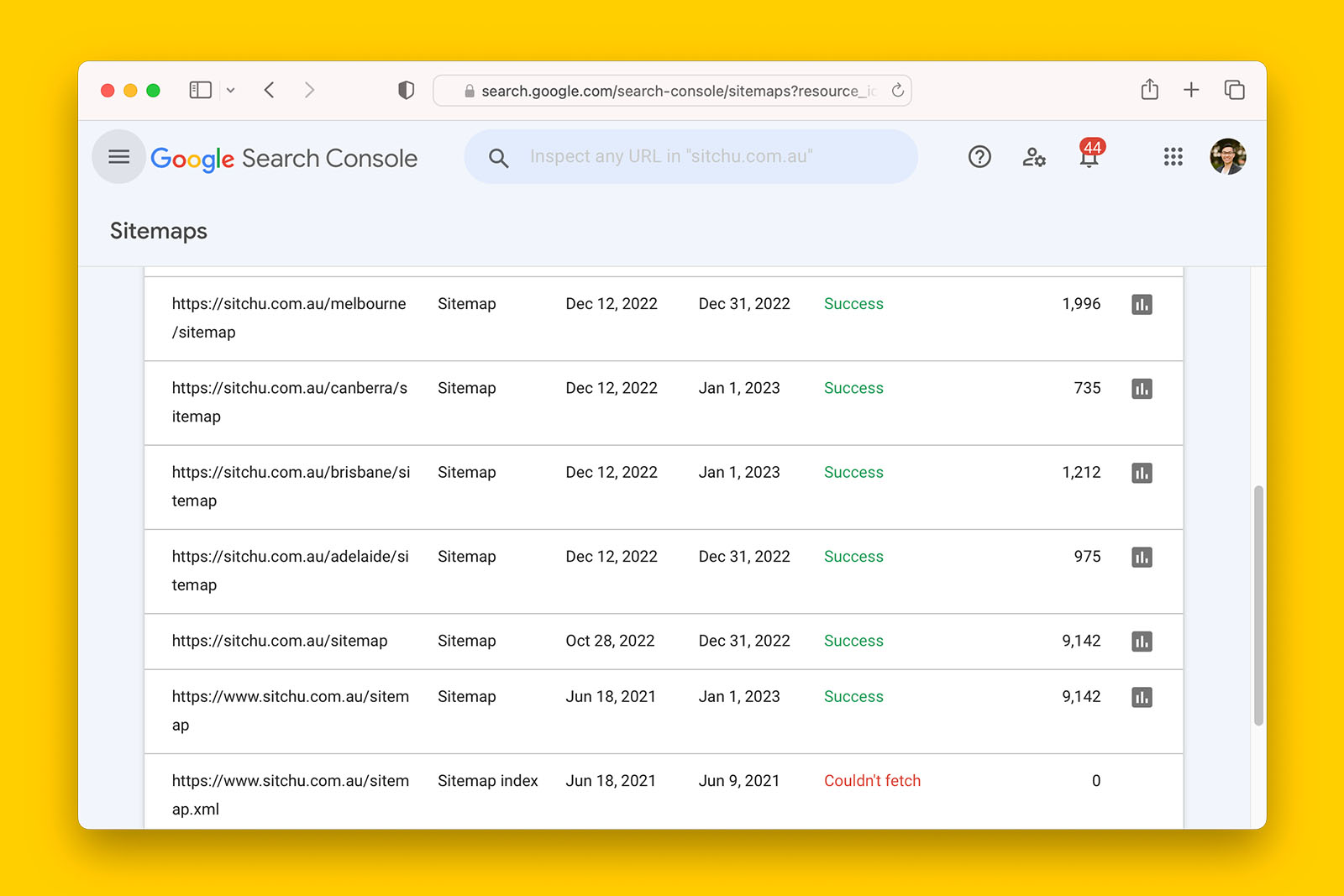

Has Google Search Console processed the submitted sitemap(s)?

How to confirm if submitted sitemaps have been processed by GSC:

- Log into Google Search Console

- Go to INDEX > SITEMAPS

- Look at the STATUS column

- If you see “Success” and DISCOVERED URLS > 0, check ‘yes’ in the spreadsheet

- If you see “error”, check ‘no’ in the spreadsheet

- If you see “processing”, check ‘no’ in the spreadsheet

Possible answers:

- yes

- no

- not applicable

What this means:

> If ‘yes’, URLs in the sitemap have been processed by GSC.

>> If ‘no’, GSC has encountered an error with crawling the URLs in the submitted sitemap file or it has not yet processed the file.

Recommended reading:

- ‘sitemap errors’ by Search Console Help

Have paginated URLs been included in the sitemap(s)?

How to check if paginated URLs are in a sitemap using Screaming Frog:

- Copy the full address of the sitemap file (e.g., domain/sitemap.xml)

- In Screaming Frog, go to MODE > LIST

- Click UPLOAD button and select DOWNLOAD XML SITEMAP

- Paste the full address of the sitemap from step #1 then click OK button

Note: it will take Screaming Frog a few seconds to extract all URLs contained in one or more sitemap files

- Click OK button and this will initiate Screaming Frog to crawl all the URLs discovered from the sitemap

- Upon crawl completion, go to SITEMAP tab

Note: if you do not see SITEMAP tab, click on the toggle to reveal a list of tabs

- In OVERVIEW tab, click on ALL to reveal all URLs included in the sitemap(s)

- Scan for pagination URLs in the ADDRESS column:

- If you find paginated URLs, check ‘yes’ in the spreadsheet

- If there are no paginated URLs, check ‘no’ in the spreadsheet
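On large sitemaps, scanning the ADDRESS column by eye is slow, so you could export the URLs and filter them with a pattern instead. The pattern below is an assumption that covers common WordPress-style pagination (`/page/2/`, `?page=2`, `?paged=2`); other platforms use different URL formats, so adjust it to the site you are auditing.

```python
import re

# Assumed pagination formats: /page/<n>/ at the end of the path,
# or a page/paged query parameter with a numeric value.
PAGINATION_PATTERN = re.compile(r"(/page/\d+/?$)|([?&](page|paged)=\d+)", re.IGNORECASE)


def paginated_urls(urls):
    """Return the URLs that look like part of a paginated series."""
    return [url for url in urls if PAGINATION_PATTERN.search(url)]


urls = [
    "https://example.com/blog/",
    "https://example.com/blog/page/2/",
    "https://example.com/shop/?paged=3",
    "https://example.com/page/about/",
]
print(paginated_urls(urls))
```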

Possible answers:

- yes

- no

- not applicable

What this means:

> If ‘yes’, there are paginated URLs in the sitemap, which is not ideal. Consider removing them because a sitemap should only contain pages that you want to rank, and paginated series do not usually fit this criterion. That is, the deeper URLs linked from a paginated series tend to be more important than the paginated pages themselves. The one exception to this rule is including the “View All” URL in the sitemap.

>> If ‘no’, paginated URLs are not in sitemap.

Recommended reading:

- ‘pagination best practices for Google’ by Google Search Central

- ‘pagination attributes’ by ContentKing

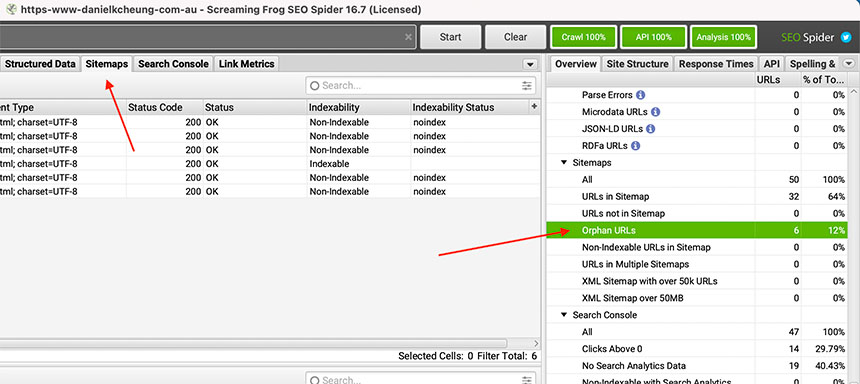

Are there orphaned URLs in the sitemap?

Note: the website you’re auditing will need to have a sitemap for you to check this

How to find if there are orphan URLs in a sitemap using Screaming Frog:

- Copy the full address of the sitemap file (e.g., domain/sitemap.xml)

- In Screaming Frog, go to MODE > LIST

- Click UPLOAD button and select DOWNLOAD XML SITEMAP

- Paste the full address of the sitemap from step #1 then click OK button

Note: it will take Screaming Frog a few seconds to extract all URLs contained in one or more sitemap files

- Click OK button and this will initiate Screaming Frog to crawl all the URLs discovered from the sitemap

- Upon crawl completion, go to CRAWL ANALYSIS > START

- Navigate to the SITEMAPS tab and on the right hand side panel you will see a number count of ORPHAN URLS in sitemap:

- If you see a number greater than 0, check ‘yes’ in the spreadsheet

- If you see 0, check ‘no’ in the spreadsheet
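Conceptually, an orphan check is a comparison of two lists: the URLs in the sitemap and the URLs that a crawl reached by following internal links. The sketch below assumes you have exported both lists. The function name is hypothetical, and the only normalisation applied is stripping a trailing slash, which may not suit every site.

```python
def orphan_urls(sitemap_urls, internally_linked_urls):
    """Return sitemap URLs that no internal link points to."""
    def normalise(url):
        # Treat /page and /page/ as the same URL (an assumption).
        return url.rstrip("/")

    linked = {normalise(url) for url in internally_linked_urls}
    return sorted(url for url in sitemap_urls if normalise(url) not in linked)


sitemap_urls = [
    "https://example.com/",
    "https://example.com/services/",
    "https://example.com/old-landing-page/",
]
internally_linked_urls = [
    "https://example.com",
    "https://example.com/services/",
]
print(orphan_urls(sitemap_urls, internally_linked_urls))
```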

Possible answers:

- yes

- no

- not applicable

What this means:

> If ‘yes’, there are URLs that have no internal links pointing to them. This means that it is very difficult for search engines to discover these pages on the website. Confirm if these pages are important and add internal links to these orphan URLs from the navigation menu, homepage, or other pages that get organic traffic.

>> If ‘no’, all URLs have another URL linking to them. However, if you are experiencing indexing issues, look for more opportunities to link to these URLs internally.

Are there 301 URLs in the sitemap?

Note: the website you’re auditing will need to have a sitemap for you to check this

How to find 301 URLs in a sitemap using Screaming Frog:

- Copy the full address of the sitemap file (e.g., domain/sitemap.xml)

- In Screaming Frog, go to MODE > LIST

- Click UPLOAD button and select DOWNLOAD XML SITEMAP

- Paste the full address of the sitemap from step #1 then click OK button

Note: it will take Screaming Frog a few seconds to extract all URLs contained in one or more sitemap files

- Click OK button and this will initiate Screaming Frog to crawl all the URLs discovered from the sitemap

- Upon crawl completion, go to CRAWL ANALYSIS > START

- When the analysis is done, go to SITEMAP tab

Note: if you do not see SITEMAP tab, click on the toggle to reveal a list of tabs

- In OVERVIEW tab, you will see the total number of non-indexable URLs that have been included in the sitemap

- Click on STATUS CODE to sort the column in ascending or descending order

- Scan for any 301 results:

- If you see one or more 301 results in the STATUS CODE column, check ‘yes’ in the spreadsheet

- If you see no 301 results in the STATUS CODE column, check ‘no’ in the spreadsheet
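If you export the crawl instead of sorting the column in the interface, grouping the rows by status code gives the same answer. The sketch below assumes the export has been reduced to `(url, status_code)` pairs; the function name and sample data are made up.

```python
from collections import defaultdict


def group_by_status(crawl_results):
    """Group (url, status_code) pairs into a dict keyed by status code."""
    grouped = defaultdict(list)
    for url, status in crawl_results:
        grouped[status].append(url)
    return dict(grouped)


crawl_results = [
    ("https://example.com/", 200),
    ("https://example.com/old-page/", 301),
    ("https://example.com/deleted/", 404),
    ("https://example.com/moved/", 301),
]
grouped = group_by_status(crawl_results)
print(len(grouped.get(301, [])), "URLs return 301")
```

The same grouping works for any other status code you want to check.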

Possible answers:

- yes

- no

- not applicable

What this means:

> If ‘yes’, there are 301 URLs in the sitemap. Generally speaking, only indexable URLs should be in a sitemap (i.e., status code 200 only). However, in some instances, it is good practice to have a separate sitemap of 301 redirects so that they can be crawled, mapped and monitored easily. Having 301 URLs in a sitemap is not usually cause for concern, but it can point to a history of technical issues that have not been resolved.

>> If ‘no’, there are no 301 URLs in the sitemap.

Are there 404 URLs in the sitemap?

Note: the website you’re auditing will need to have a sitemap for you to check this

How to find 404 URLs in a sitemap using Screaming Frog:

- Copy the full address of the sitemap file (e.g., domain/sitemap.xml)

- In Screaming Frog, go to MODE > LIST

- Click UPLOAD button and select DOWNLOAD XML SITEMAP

- Paste the full address of the sitemap from step #1 then click OK button

Note: it will take Screaming Frog a few seconds to extract all URLs contained in one or more sitemap files

- Click OK button and this will initiate Screaming Frog to crawl all the URLs discovered from the sitemap

- Upon crawl completion, go to CRAWL ANALYSIS > START

- When the analysis is done, go to SITEMAP tab

Note: if you do not see SITEMAP tab, click on the toggle to reveal a list of tabs

- In OVERVIEW tab, you will see the total number of non-indexable URLs that have been included in the sitemap

- Click on STATUS CODE to sort the column in ascending or descending order

- Scan for any 404 results:

- If you see one or more 404 results in the STATUS CODE column, check ‘yes’ in the spreadsheet

- If you see no 404 results in the STATUS CODE column, check ‘no’ in the spreadsheet

Possible answers:

- yes

- no

- not applicable

What this means:

> If ‘yes’, there are 404 URLs in the sitemap. Generally speaking, only indexable URLs should be in a sitemap (i.e., status code 200 only). However, in some instances, it is good practice to have a separate sitemap of deleted URLs so that they can be crawled, mapped and monitored easily. Having 404 URLs in a sitemap is not usually cause for concern and does not directly contribute towards indexing issues. But seeing a large number of 404 status code URLs in a sitemap can point to a history of technical issues that have not been resolved.

>> If ‘no’, there are no 404 URLs in the sitemap.

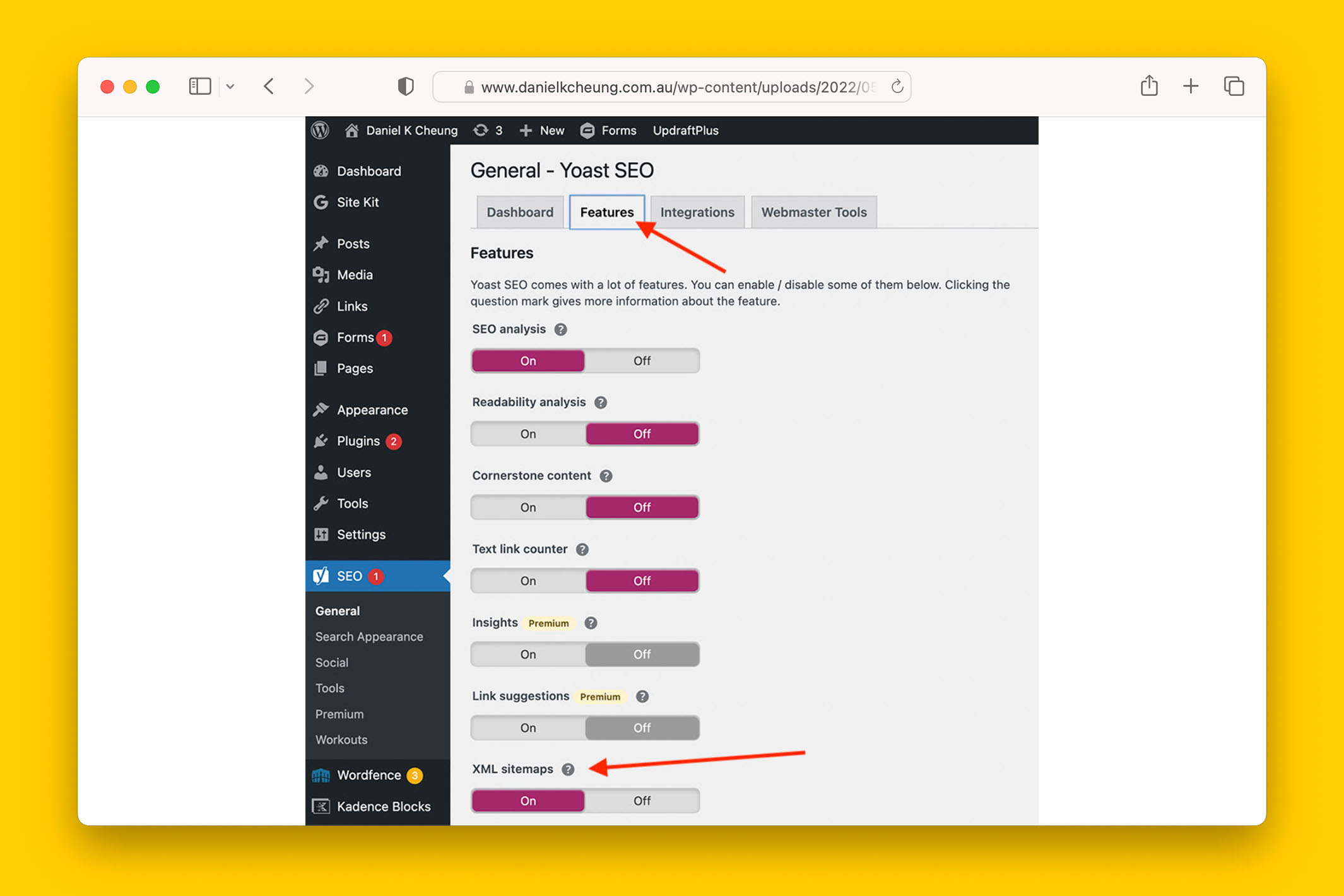

How are sitemaps generated on the website?

How to find out how sitemaps are generated:

- Log into the WordPress dashboard

- Look for an SEO plugin such as Yoast, AIOSEO, Rank Math etc.

- Check if the plugin has been tasked with creating sitemaps

Do any of the sitemaps in Google Search Console show errors?

How to check if there are sitemap errors in GSC:

- Log into Google Search Console

- Go to INDEX > SITEMAPS

- Check the STATUS column for errors

- If an error is reported, check ‘yes’ in the spreadsheet

- If no errors are reported, check ‘no’ in the spreadsheet.

Possible answers:

- yes

- no

- not applicable

What this means:

> If ‘yes’, check if the submitted sitemap is relevant and current. If it is, investigate why GSC is reporting an error as this may give you a clue as to what is blocking efficient crawling and indexing for the website.

>> If ‘no’, there are no sitemap errors reported by Search Console.