XML sitemap best practices for SEO so that you can get the most out of them

Key takeaways:

- Sitemaps are very useful because they enable search engines to discover all pages on a site and to download them quickly when they change (source)

- A sitemap is not needed for search engines to crawl and index a website’s pages

- You can have more than one sitemap for your website

- Google ignores <priority> and <changefreq> values

- The position of a URL in a sitemap is not important (Google does not crawl URLs in the order they appear in your sitemap)

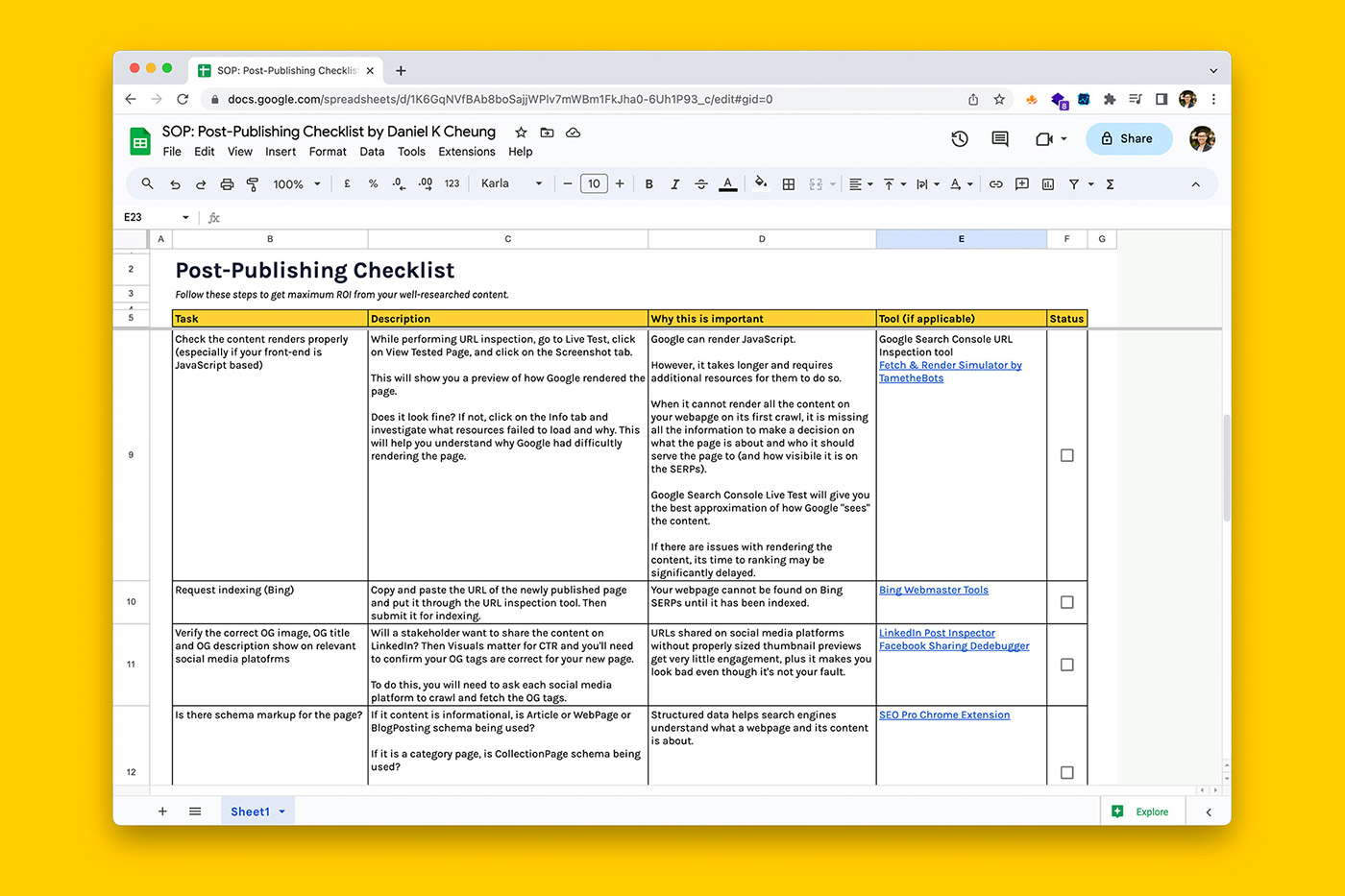

- Manually submit your sitemaps to Google Search Console and Bing Webmaster Tools

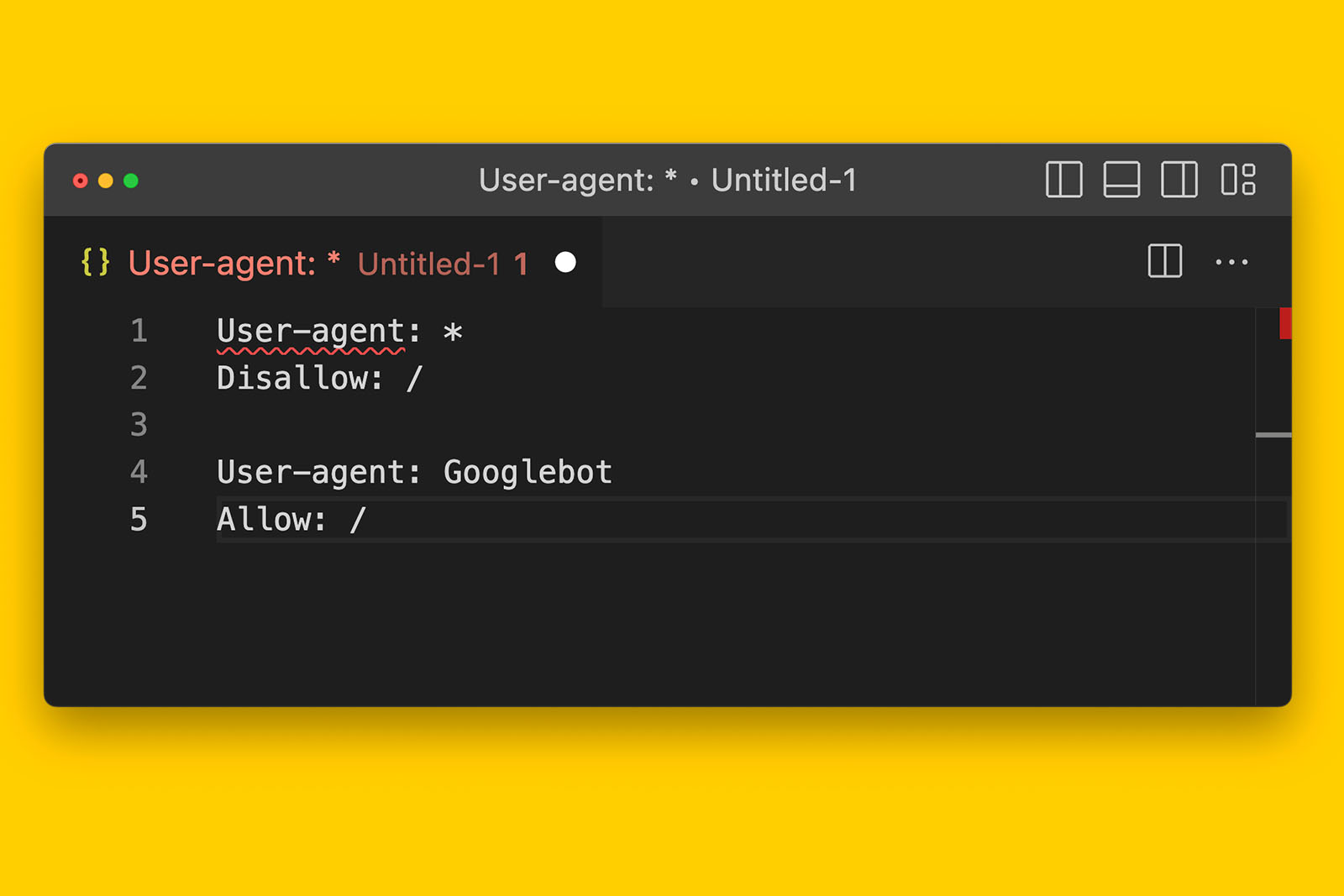

- You can include the URL to your sitemap(s) in your robots.txt file.
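To illustrate the robots.txt point, here is a minimal Python sketch that reads the `Sitemap:` lines out of a robots.txt body using the standard library's `urllib.robotparser`. The robots.txt content and the example.com URLs are placeholders, not taken from any real site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; example.com is a placeholder domain.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/

Sitemap: https://www.example.com/sitemap_index.xml
Sitemap: https://www.example.com/sitemap-products.xml
"""


def sitemaps_from_robots(robots_txt: str) -> list[str]:
    """Return the sitemap URLs declared in a robots.txt body."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() (Python 3.8+) returns None when no Sitemap lines exist.
    return parser.site_maps() or []


print(sitemaps_from_robots(ROBOTS_TXT))
```

A robots.txt file can list several sitemaps, one `Sitemap:` line each, which is why the function returns a list.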

Crawling and indexing the Internet is a complex process that search engines undertake. To make this easier and more efficient, sitemaps help search engines know where to find the important pages on a website.

What is a sitemap?

A sitemap is a file that tells search engines (like Google) where to find all the important pages on your website. This is so they can crawl your website more efficiently. For multi-lingual websites, sitemaps can tell search engines if there are alternate language versions of the page.

What are the advantages of having a sitemap?

Search engines don’t need a sitemap to discover and index a website’s pages. But having a sitemap can make it a whole lot easier for them.

What is the difference between a HTML and XML sitemap?

Sitemaps can be in XML or HTML format, and they can be created for webpages, images, videos, and news content. Both XML and HTML sitemaps can be crawled by search engines.

XML sitemaps are created for search engine crawlers only. That is, even though the sitemap can be accessed by anyone, it is not shown in search result pages or linked from navigational menus or body content. In short, XML sitemaps are not created for human consumption and the URLs in the XML file are not clickable.

This is how HTML sitemaps differ from XML sitemaps. Unlike an XML sitemap, HTML sitemaps are designed to be seen and used by people browsing the web.

What are the benefits of a HTML sitemap?

In my opinion, there are no benefits to using a HTML sitemap over one or more XML sitemaps. HTML sitemaps are huge and can be overwhelming for the user. They also require significant effort to maintain compared to automating a dynamic XML sitemap via a third-party plugin such as Yoast.

To be honest, it almost seems as though HTML sitemap advocates don’t want competitors easily crawling their site.

Let me break down why the major advantages of HTML sitemaps fall flat.

One of the benefits of a HTML sitemap is that it can make the organisation of large websites with thousands of URLs easier. While this is true, you can achieve the same thing by splitting an XML sitemap into multiple sitemaps.

Another cited benefit of a HTML sitemap is that it can speed up the work of search engine crawlers. The argument is that an XML sitemap is a dump of links. While this may be true if you were to submit only a sitemap index, you can easily achieve efficient crawling of your URLs by splitting your XML sitemap.

Some people argue that a HTML sitemap will effectively cut orphaned pages to zero. And while an XML sitemap cannot do this, all you have to do is internally link relevant pages to each other.

What does a sitemap look like?

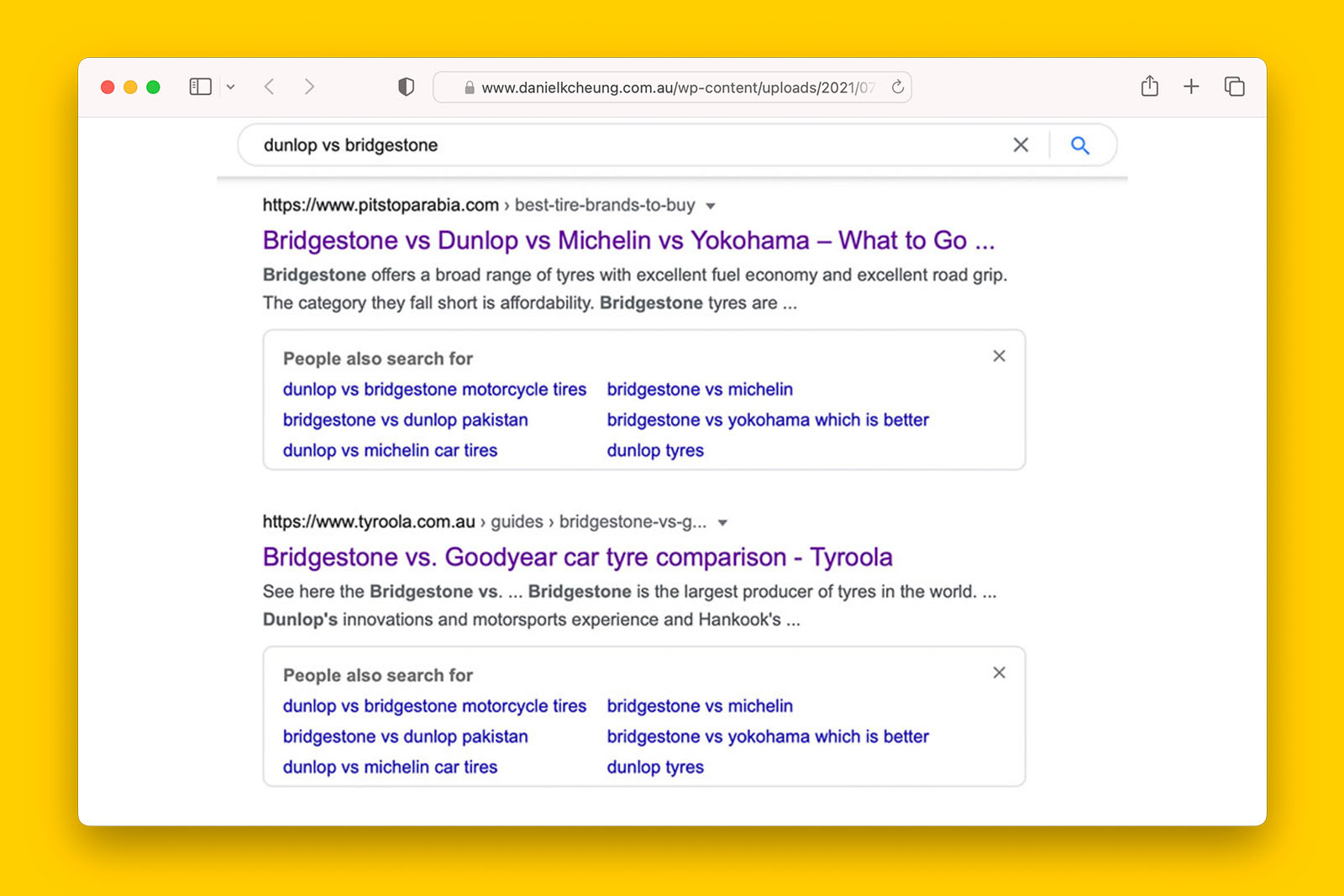

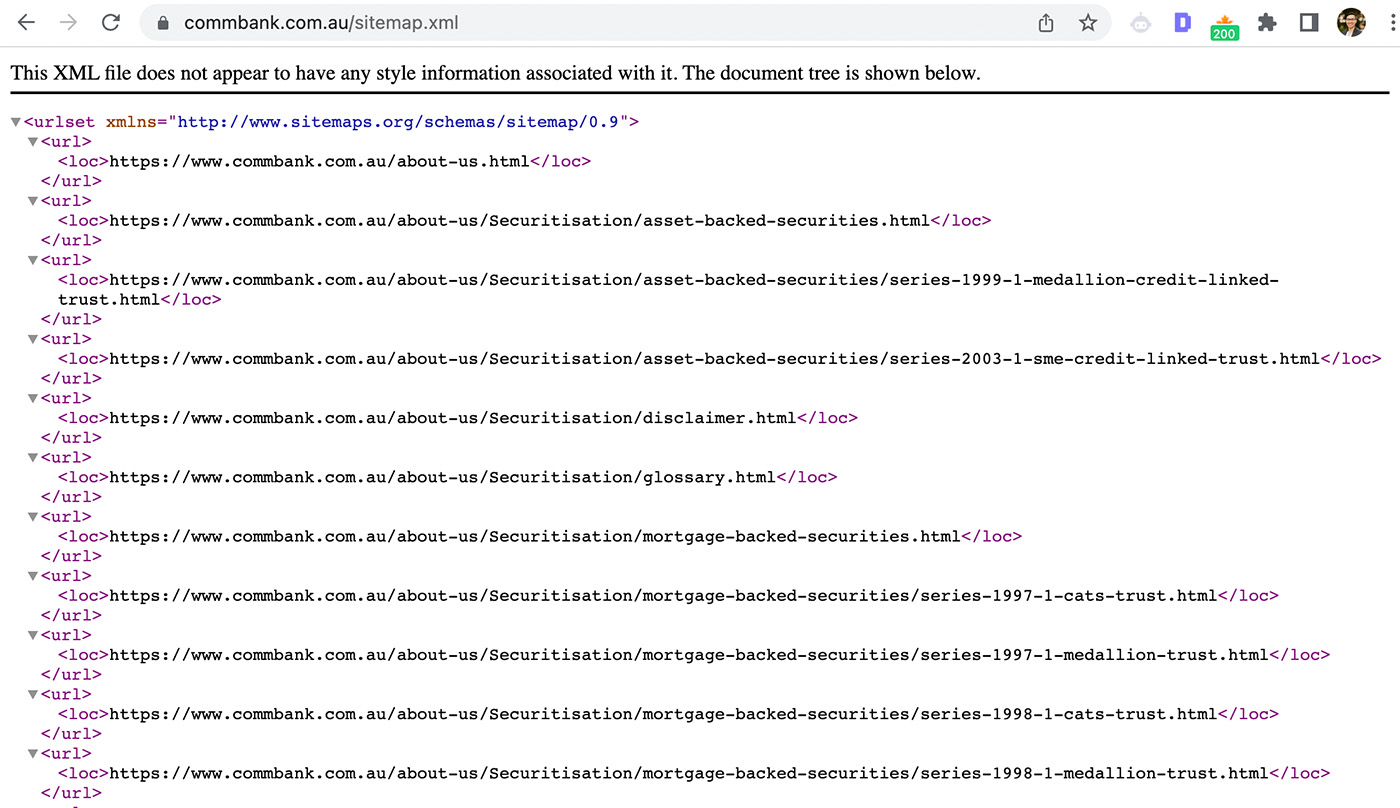

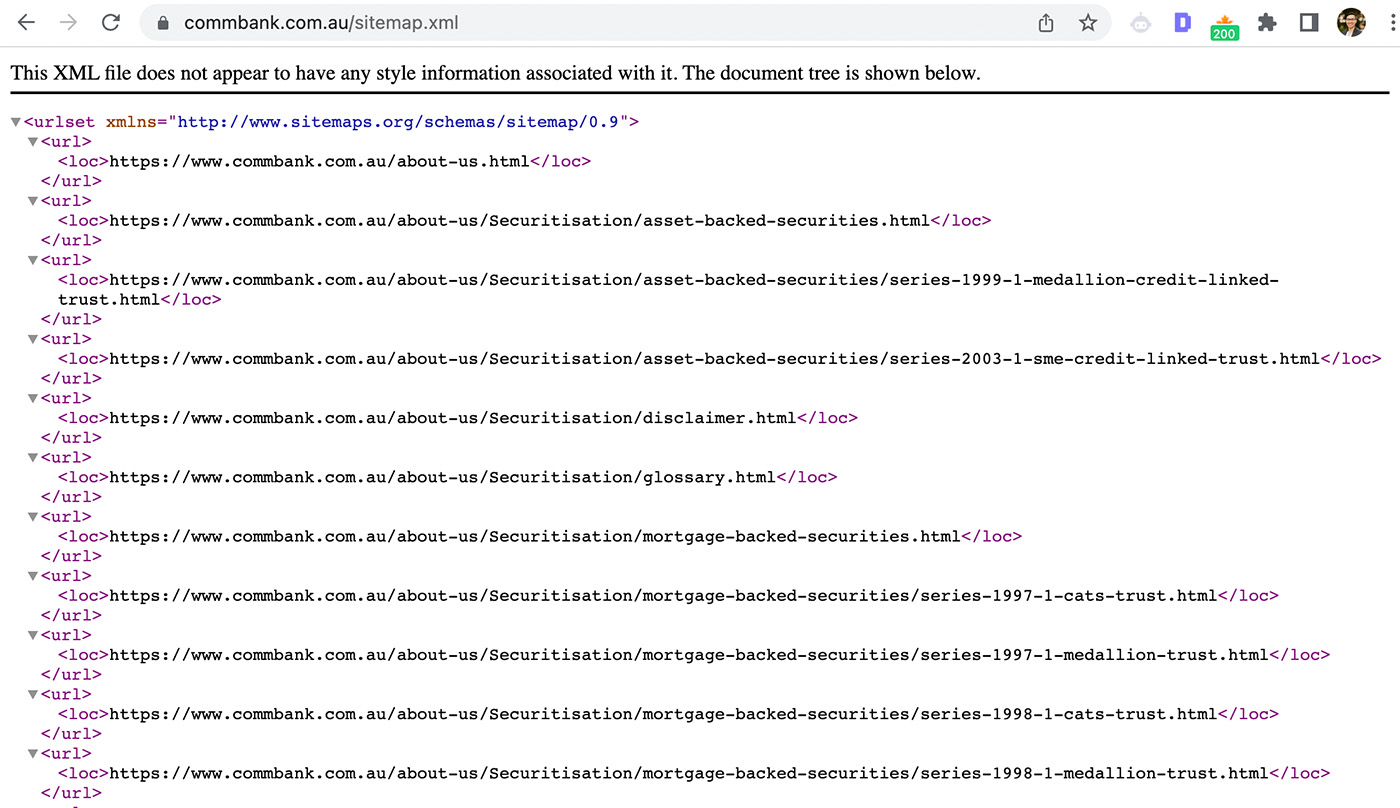

This is what an XML sitemap usually looks like:

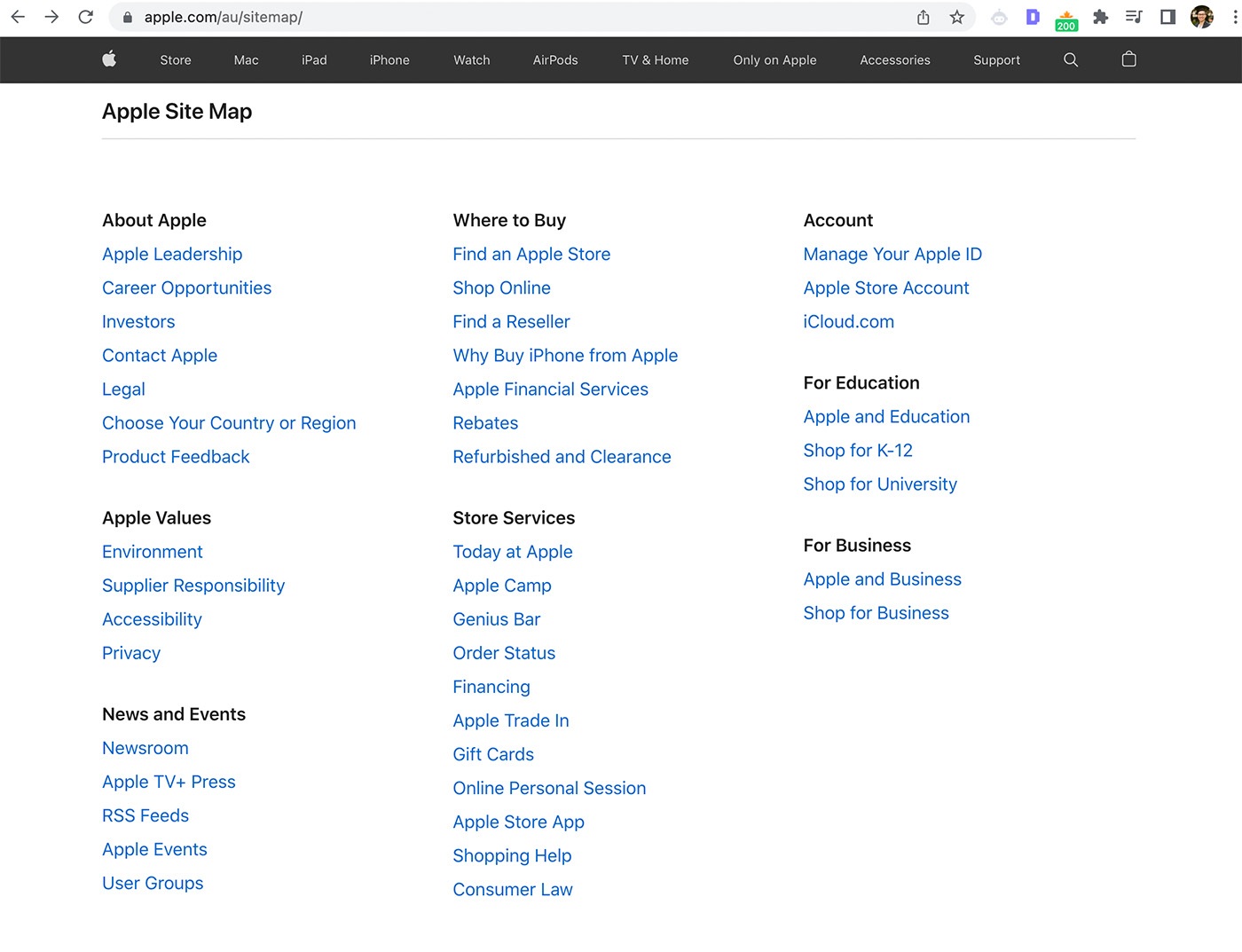
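If you prefer to see the structure as text, the following Python sketch builds a minimal XML sitemap with the standard library. It only emits the required `<urlset>`, `<url>` and `<loc>` elements of the sitemaps.org format. The URLs are placeholders, and `build_sitemap` is a hypothetical helper name, not part of any plugin.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str]) -> str:
    """Build a minimal XML sitemap with one <url><loc> entry per URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder URLs for illustration only.
print(build_sitemap([
    "https://www.example.com/",
    "https://www.example.com/blog/xml-sitemaps/",
]))
```

The sketch leaves out `<priority>` and `<changefreq>` on purpose, since Google ignores both.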

This is what a HTML sitemap in the real world looks like:

What URLs should be in a sitemap?

Your sitemap should only contain canonical URLs that are indexable.

This means that URLs with 301, 302, or 404 status codes should not be in a sitemap. This is because a sitemap is supposed to communicate to a search engine that these are the URLs that you want it to find and show to its users.

What this means in terms of sitemap best practices:

- When a page is deleted, the URL should be removed from the sitemap.

- When a page is redirected, the URL should be removed from the sitemap.

- When a new canonical URL is published, it should be added to the sitemap.

- When a page has been marked as noindex, the URL should be removed from the sitemap.

- Since Google ignores <priority> values, assigning a high priority to URLs will not help them get crawled or indexed quicker.

- URLs with query strings should not be submitted in your sitemap.
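The rules above can be sketched as a single filter. This is only an illustration: the `Page` record and its field names are assumptions about what your crawl data looks like, and treating every non-200 status as excluded is a simplification of the 301, 302 and 404 cases mentioned earlier.

```python
from dataclasses import dataclass
from urllib.parse import urlsplit


@dataclass
class Page:
    """Hypothetical crawl record; the field names are assumptions."""
    url: str
    status: int      # HTTP status code returned for the URL
    noindex: bool    # True if the page carries a noindex directive
    canonical: str   # URL declared in the page's canonical tag


def belongs_in_sitemap(page: Page) -> bool:
    """Keep only 200-status, indexable, self-canonical URLs without a query string."""
    if page.status != 200:           # drops 301, 302, 404 and other non-200s
        return False
    if page.noindex:                 # noindexed pages are removed
        return False
    if page.canonical != page.url:   # only canonical URLs are listed
        return False
    if urlsplit(page.url).query:     # parameterised URLs are excluded
        return False
    return True


# Placeholder crawl data for illustration only.
pages = [
    Page("https://www.example.com/shoes/", 200, False, "https://www.example.com/shoes/"),
    Page("https://www.example.com/old-page/", 301, False, "https://www.example.com/old-page/"),
    Page("https://www.example.com/shoes/?size=7.5", 200, False, "https://www.example.com/shoes/"),
]
print([p.url for p in pages if belongs_in_sitemap(p)])
```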

Following these best practice guidelines can be tedious when sitemaps are generated manually. This is because maintaining manual sitemaps requires dev time and developers rarely have spare time. As a result, you will often find non-indexable URLs in a manually generated XML sitemap.

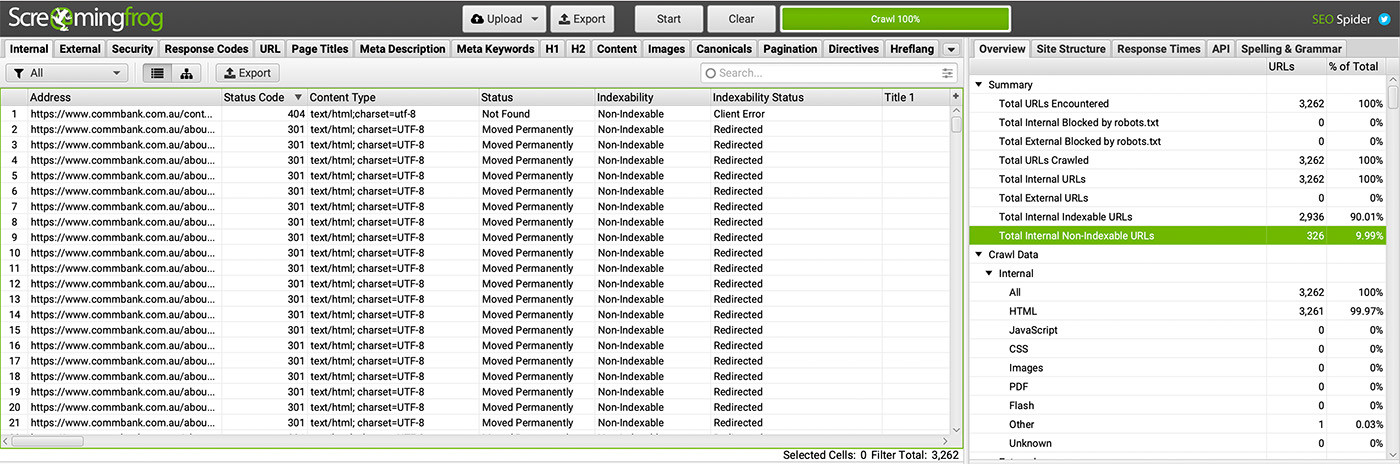

In the above screenshot, CommBank has submitted a total of 3,261 URLs in their sitemap.xml file.

Using Screaming Frog SEO Spider in list mode, we can see in the above screenshot that there are 326 non-indexable URLs in the sitemap, with 33 URLs having a noindex directive, one URL being a 404, and 160 redirected URLs.

This is odd and not ideal as sitemap best practice tells us that only URLs that you want crawled and indexed by search engines should be included.
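If you want to run a similar audit yourself, the first step is getting the list of URLs out of the sitemap so you can feed them to a crawler. Here is a minimal sketch using Python's standard library. The sample XML and the `urls_in_sitemap` helper name are made up for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Placeholder sitemap content for illustration only.
SAMPLE = """\
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/contact/</loc></url>
</urlset>
"""


def urls_in_sitemap(xml_text: str) -> list[str]:
    """Return every <loc> value found under <url> entries in a sitemap."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS) if loc.text]


print(urls_in_sitemap(SAMPLE))
```

The resulting list can then be pasted into a crawler's list mode to check each URL's status code and indexability.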

Avoiding stale entries like these is the benefit of dynamically generated sitemaps: third-party plugins for popular CMS platforms can generate correct sitemaps automatically based on your publishing activity.

For example, when you delete a blog post or page in WordPress, Yoast and Rankmath will automatically remove that URL from the sitemap. Similarly, when you apply a noindex meta tag to a blog post or page in WordPress, both Yoast and Rankmath will remove that URL from the XML sitemap.

Now before we move on, remember how I said that only indexable URLs should be included in a sitemap? This doesn’t mean that you cannot create and submit a separate sitemap with non-indexable URLs.

Why shouldn’t 404 status URLs be in a sitemap?

A 404 status code tells a search engine that the page is no longer available. When people land on a 404 error page after clicking an organic result on the search results page, they get frustrated because they can’t access what they want.

After a period of time, Google will de-index URLs with a 404 status code and they will no longer be discoverable from Google search.

Therefore, a page that no longer exists should not exist in your sitemap because it dilutes the trustworthiness of the sitemap. If a search engine encounters a large enough number of 404 codes in your sitemap, it may decide to ignore the entire sitemap. This is why your sitemap should not have pages that return a 404 status code.

Why shouldn’t 301 redirected URLs be in a sitemap?

Similar to HTTP 404 status codes, URLs with an HTTP 301 status code do not technically exist because the user is taken to another URL via the redirect.

However, unlike 404s, having redirected URLs in your sitemap is unlikely to stop a search engine from using the sitemap as a crawling and indexing signal.

Why shouldn’t URLs with query strings (URL parameters) be in a sitemap?

Parameterised URLs should not be in your sitemap because they’re duplicates of the canonical URL. This doesn’t mean that parameterised URLs cannot be found on Google search, because URLs with query strings can be crawled and indexed by search engines.

When a website has similar URLs, it increases the risk that a search engine picks a version that you don’t want.
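Spotting parameterised URLs before they reach a sitemap is easy to automate. The sketch below uses Python's `urllib.parse`. The URLs are placeholders and the helper names are my own. Keep in mind that stripping the query string is only a heuristic: the real canonical URL is whatever the page's canonical tag declares.

```python
from urllib.parse import urlsplit, urlunsplit


def has_query(url: str) -> bool:
    """True if the URL carries a query string."""
    return bool(urlsplit(url).query)


def strip_query(url: str) -> str:
    """Return the URL without its query string or fragment."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))


# Placeholder URL for illustration only.
url = "https://www.example.com/shoes/runner.html?forceSelSize=7.5"
print(has_query(url))
print(strip_query(url))
```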

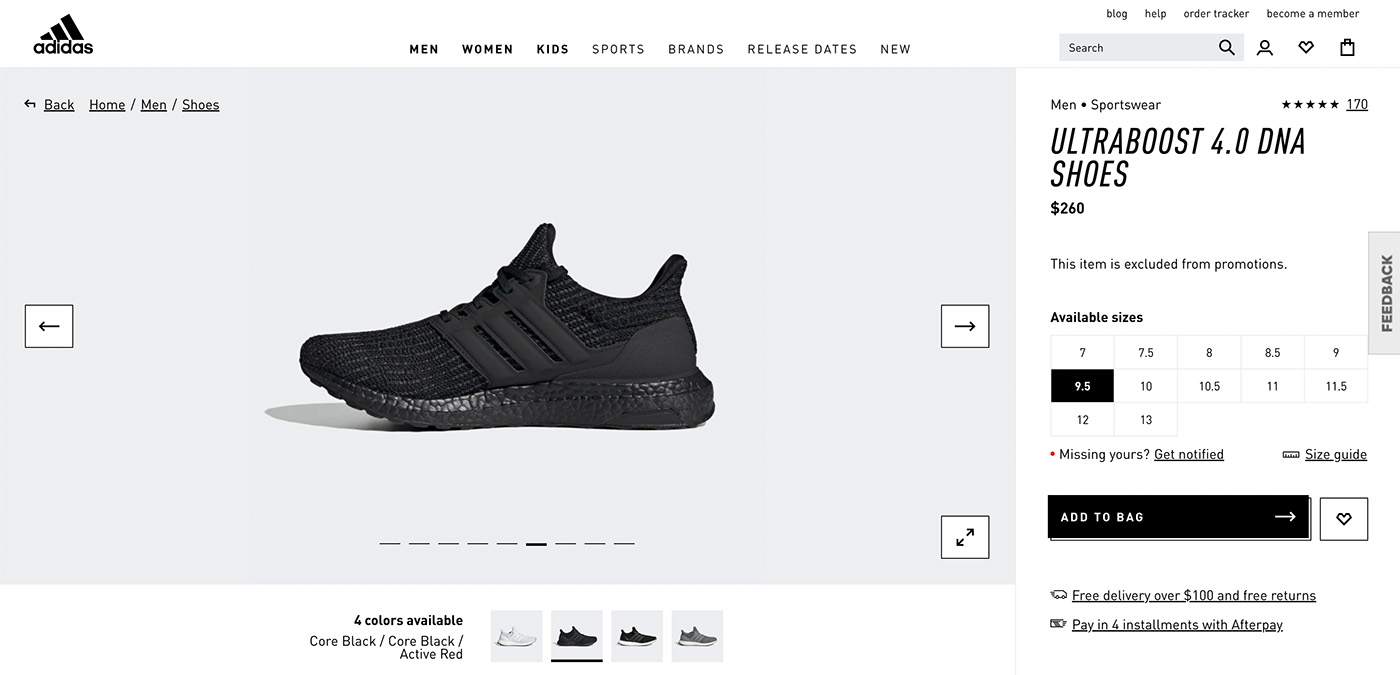

For example, on the Adidas website, when selecting the ‘Core Black’ version of the Ultraboost 4.0 DNA, a query string is added to the URL:

https://www.adidas.com.au/ultraboost-4.0-dna-shoes/FY9318.html?forceSelSize=7.5

This URL should not be included in the sitemap because it has identical content to the canonical URL which happens to be:

https://www.adidas.com.au/ultraboost-4.0-dna-shoes/FY9121.html

Why shouldn’t non-indexable URLs be in a sitemap?

Having non-indexable URLs in a sitemap sends confusing signals to a search engine. While including an indexable URL in a sitemap does not guarantee that a search engine will crawl and index it, search engines do use XML sitemaps as a crawling and indexing signal. Therefore, it is best that your sitemap contains only URLs that you want to be indexed.

How many URLs can be in a sitemap?

A single XML sitemap file can contain up to 50,000 URLs.

Should sitemaps be compressed?

Sitemaps do not need to be compressed.

One of the main reasons why sitemaps are compressed is to reduce their file size. While both Bing and Google have an upper limit of 50MB per XML sitemap, large sitemaps containing thousands of URLs should be split into separate sitemap files.

Doing this will negate the need to compress sitemaps.

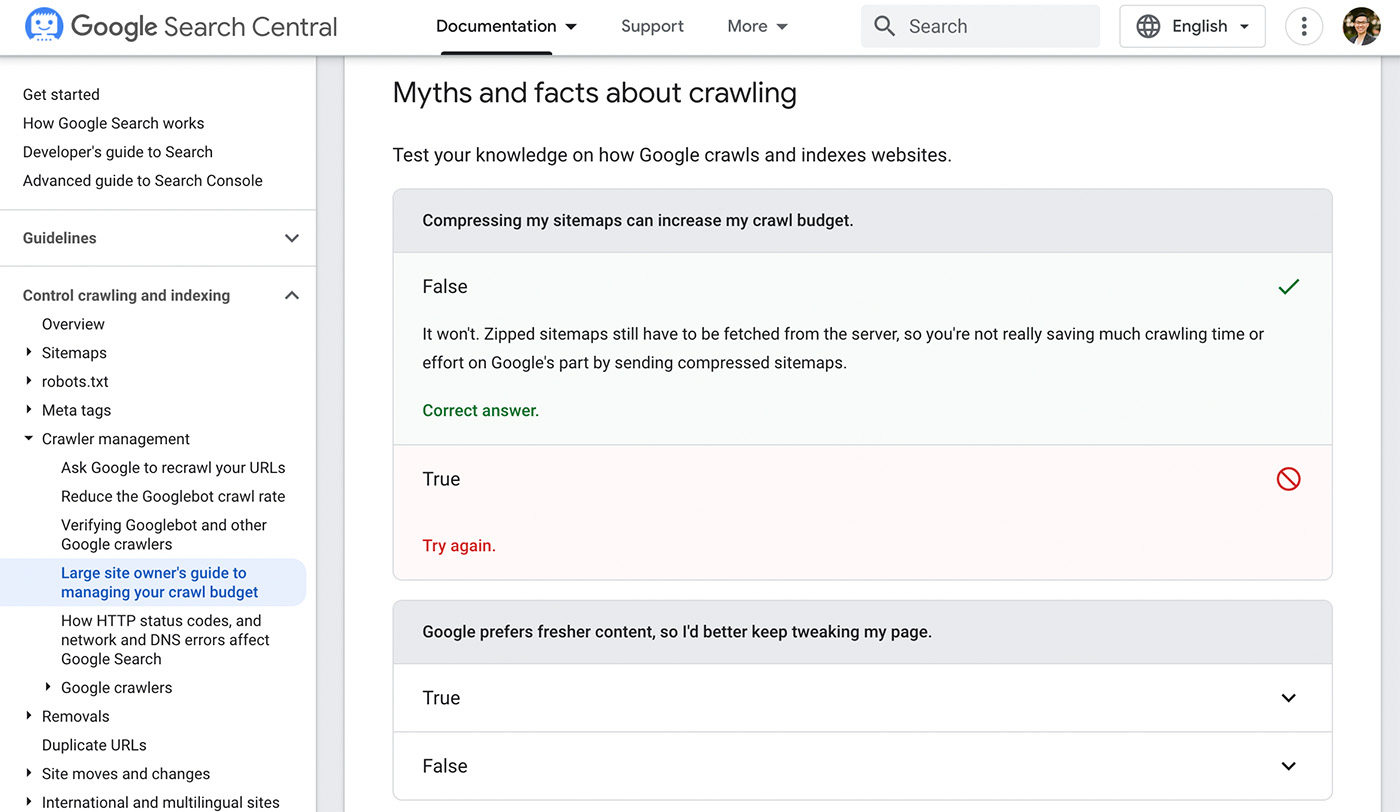

Another reason why some people recommend compressing sitemaps is that they think doing so will increase their crawl budget.

This is false (refer to the above screenshot from Google Search Central). Compressing a sitemap takes time and since it has no added benefit, all you’re achieving is wasting time.

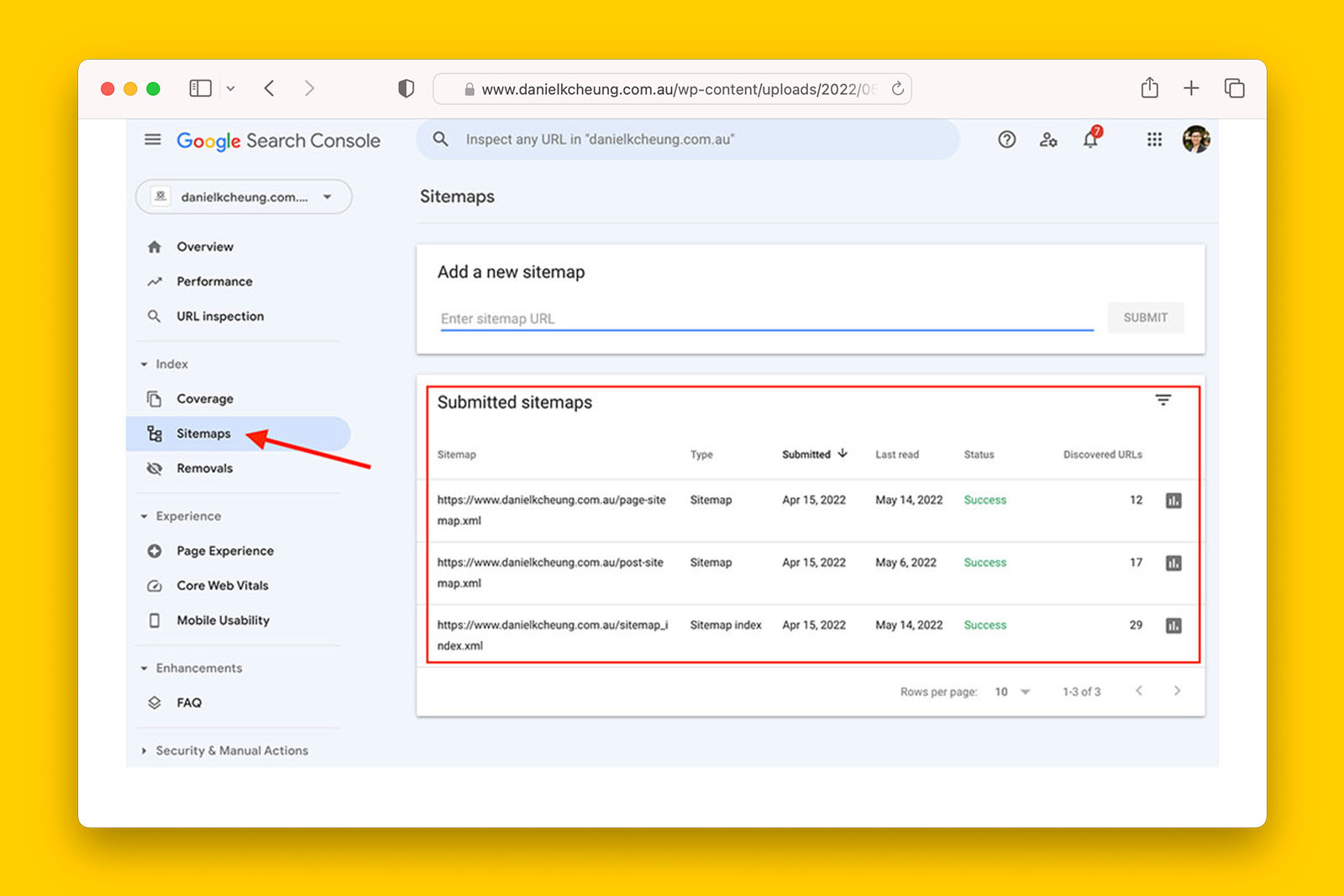

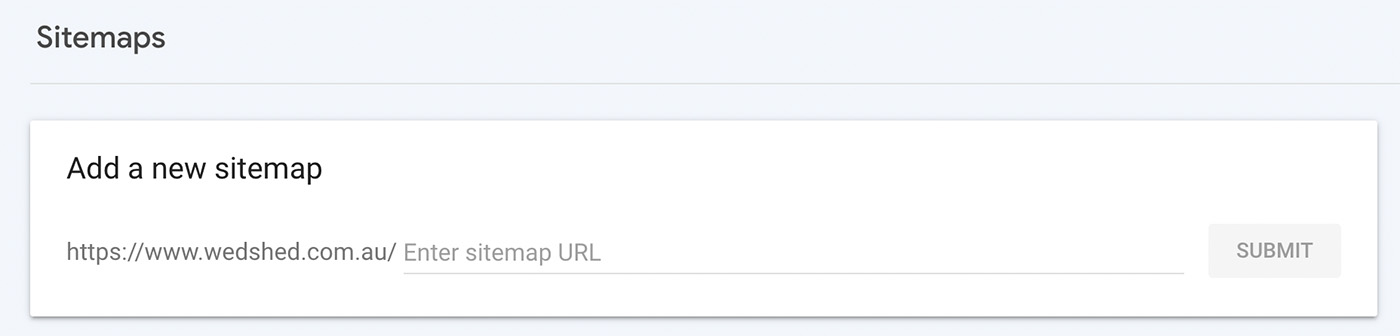

How do I submit a sitemap to Google Search Console?

- On the left hand side navigation in GSC, click on Sitemaps.

- You will see the following where you can provide the location of your XML sitemap.

Google Search Console will process the sitemap once you click “Submit”.

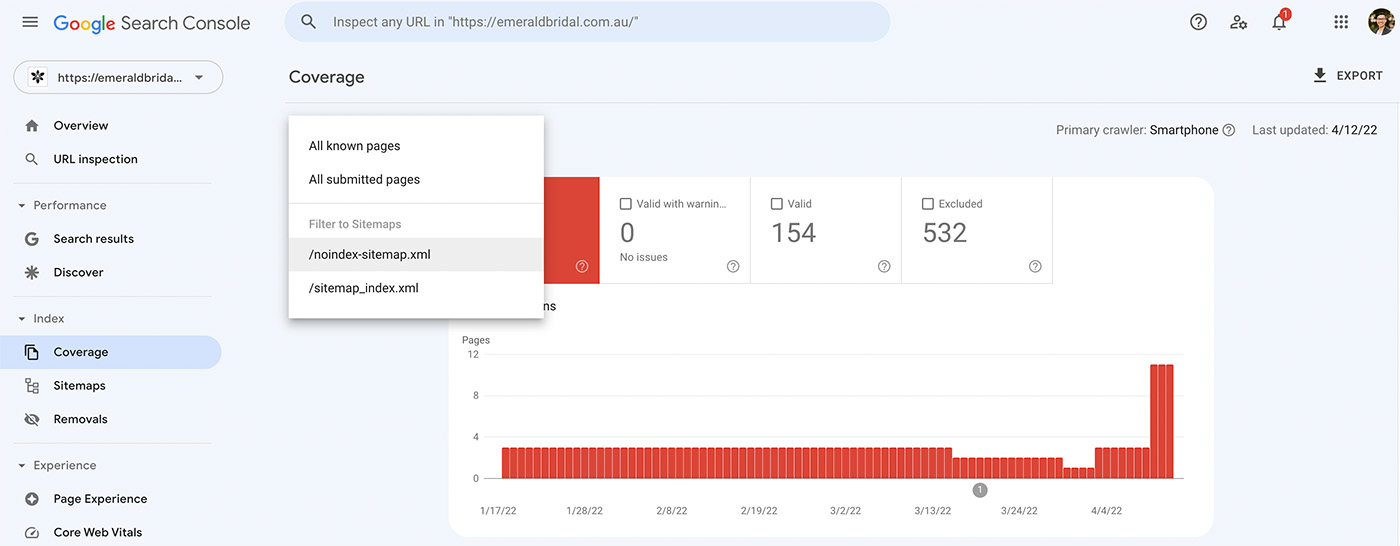

Once GSC has processed and downloaded the sitemap, you will be able to see the index coverage status of the URLs included in the sitemap in the coverage tab.

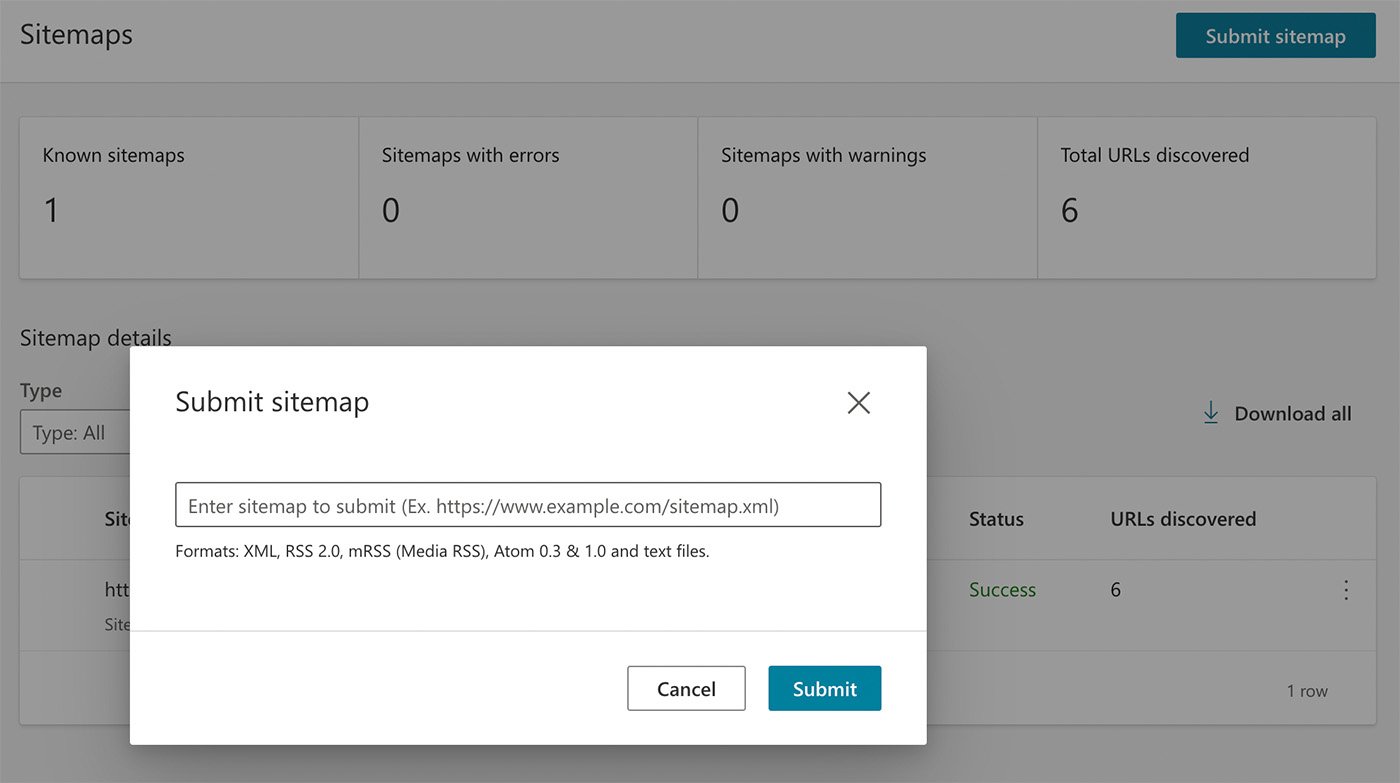

How do I submit a sitemap to Bing Webmaster Tools?

- On the left hand side navigation in Bing Webmaster Tools, click on Sitemaps.

- Click on the “Submit sitemap” button in the top right hand corner.

- Enter the full URL of your sitemap.

- Click “Submit”.

Should you submit a sitemap index and/or individual sitemap xml files?

You can do both. This is because there is no harm in submitting sitemaps with overlapping URLs. And if you only submit your sitemap index to Google Search Console or Bing Webmaster Tools, they will be able to find the nested sitemaps.
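For context, a sitemap index is just another XML file that lists the locations of your individual sitemaps. Here is a minimal Python sketch of how one could be generated. The sitemap URLs are placeholders and `build_sitemap_index` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap_index(sitemap_urls: list[str]) -> str:
    """Build a sitemap index with one <sitemap><loc> entry per sitemap file."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for url in sitemap_urls:
        entry = ET.SubElement(index, "sitemap")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(index, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder sitemap locations for illustration only.
print(build_sitemap_index([
    "https://www.example.com/sitemap-pages.xml",
    "https://www.example.com/sitemap-products.xml",
]))
```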

Can I have more than one sitemap?

Yes, you can have more than one sitemap and, in many cases, having more than one is recommended.

Most people make the mistake of dumping their sitemap index into Google Search Console and calling it a day. While this practice is not incorrect, you’re not getting the maximum value out of submitting sitemap XML files to Google Search Console and Bing Webmaster Tools.

Here are some reasons why you’ll want to have more than one sitemap for your website.

You are managing a website with hundreds of thousands of URLs.

The URL limit for a sitemap is 50,000. Therefore, if your website has 500,000 indexable URLs you should separate these into at least 10 sitemap files. Similarly, if your website has 5,000,000 indexable URLs, you will need at least 100 sitemaps.

However, just because there is a hard limit of 50,000 URLs per sitemap does not mean each sitemap needs to contain 50,000 URLs. This is because it takes more resources for a search engine to crawl and index a sitemap of 50,000 URLs compared to a sitemap that has 1,000 URLs in it.
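The arithmetic above, and the splitting itself, can be sketched in a few lines of Python. The function names are my own, and `per_file` lets you pick a smaller batch size than the 50,000 limit.

```python
import math

MAX_URLS_PER_SITEMAP = 50_000  # per-file URL limit discussed above


def sitemaps_needed(total_urls: int, per_file: int = MAX_URLS_PER_SITEMAP) -> int:
    """Minimum number of sitemap files needed for a given number of URLs."""
    if not 1 <= per_file <= MAX_URLS_PER_SITEMAP:
        raise ValueError("per_file must be between 1 and 50,000")
    return math.ceil(total_urls / per_file)


def chunk_urls(urls: list[str], per_file: int = MAX_URLS_PER_SITEMAP) -> list[list[str]]:
    """Split a list of URLs into consecutive batches of at most per_file URLs."""
    return [urls[i:i + per_file] for i in range(0, len(urls), per_file)]


print(sitemaps_needed(500_000))                  # 10
print(sitemaps_needed(5_000_000))                # 100
print(sitemaps_needed(500_000, per_file=1_000))  # 500
```

Each batch returned by `chunk_urls` would become its own sitemap file.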

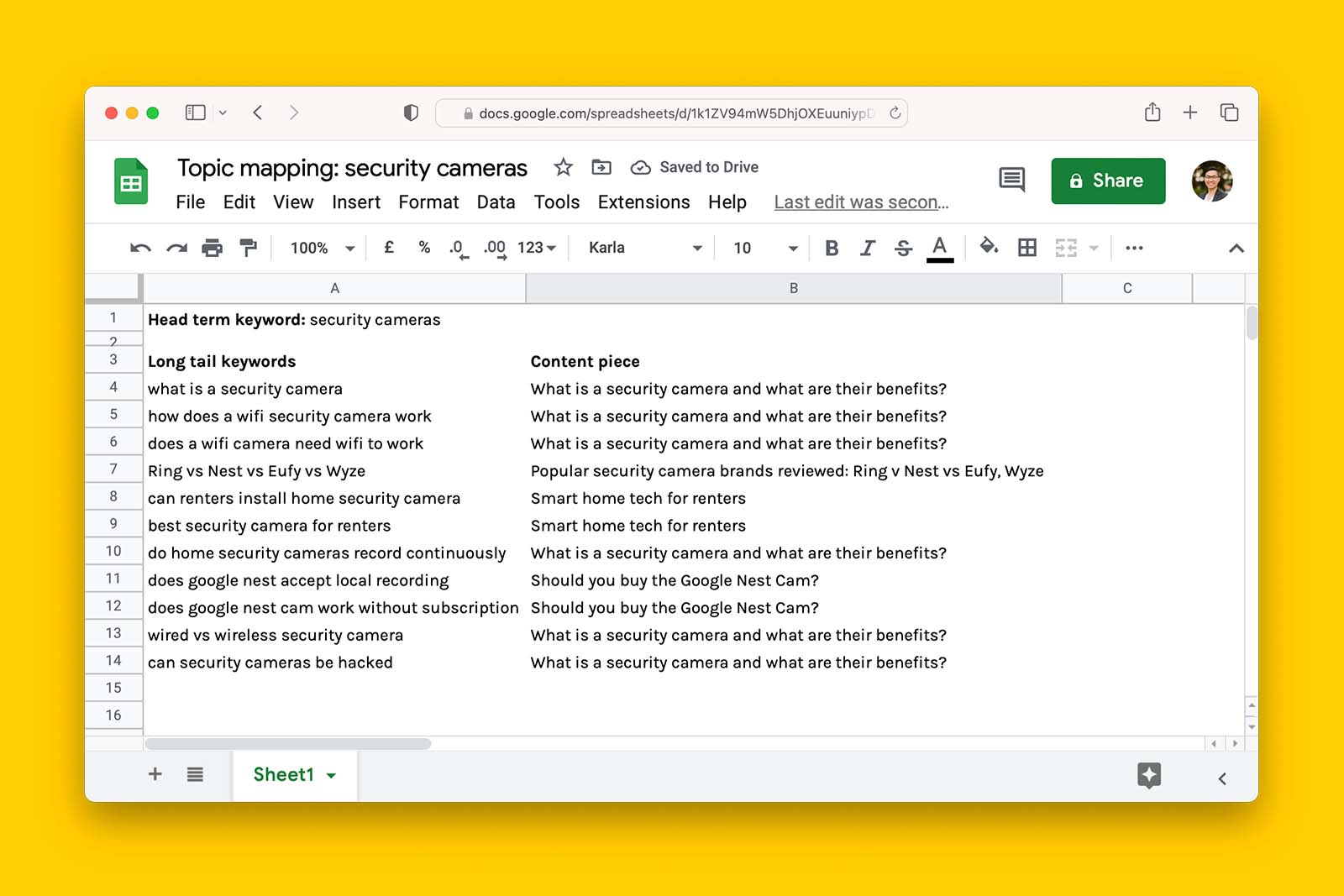

You are managing a website that publishes a lot of URLs across multiple categories and topics.

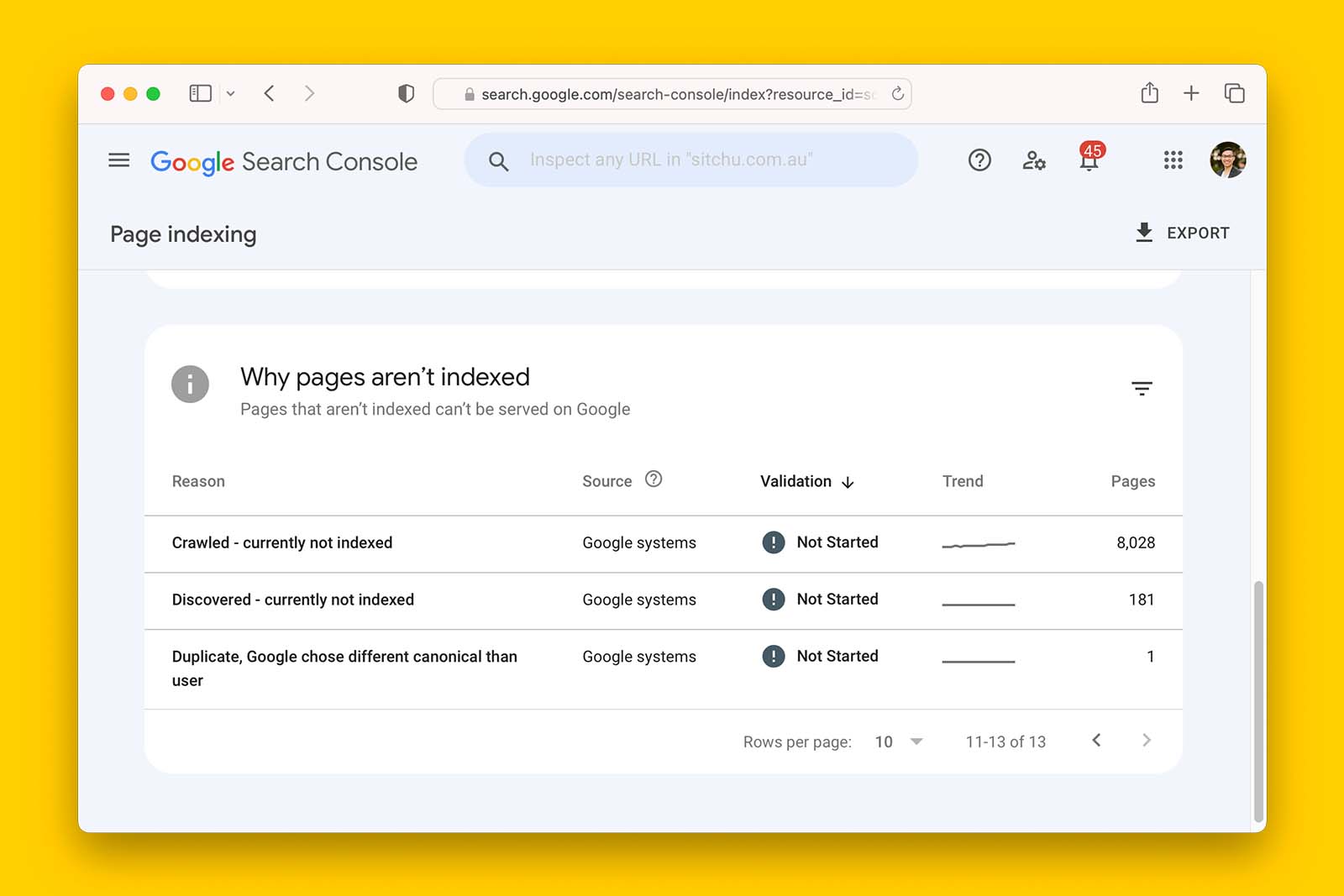

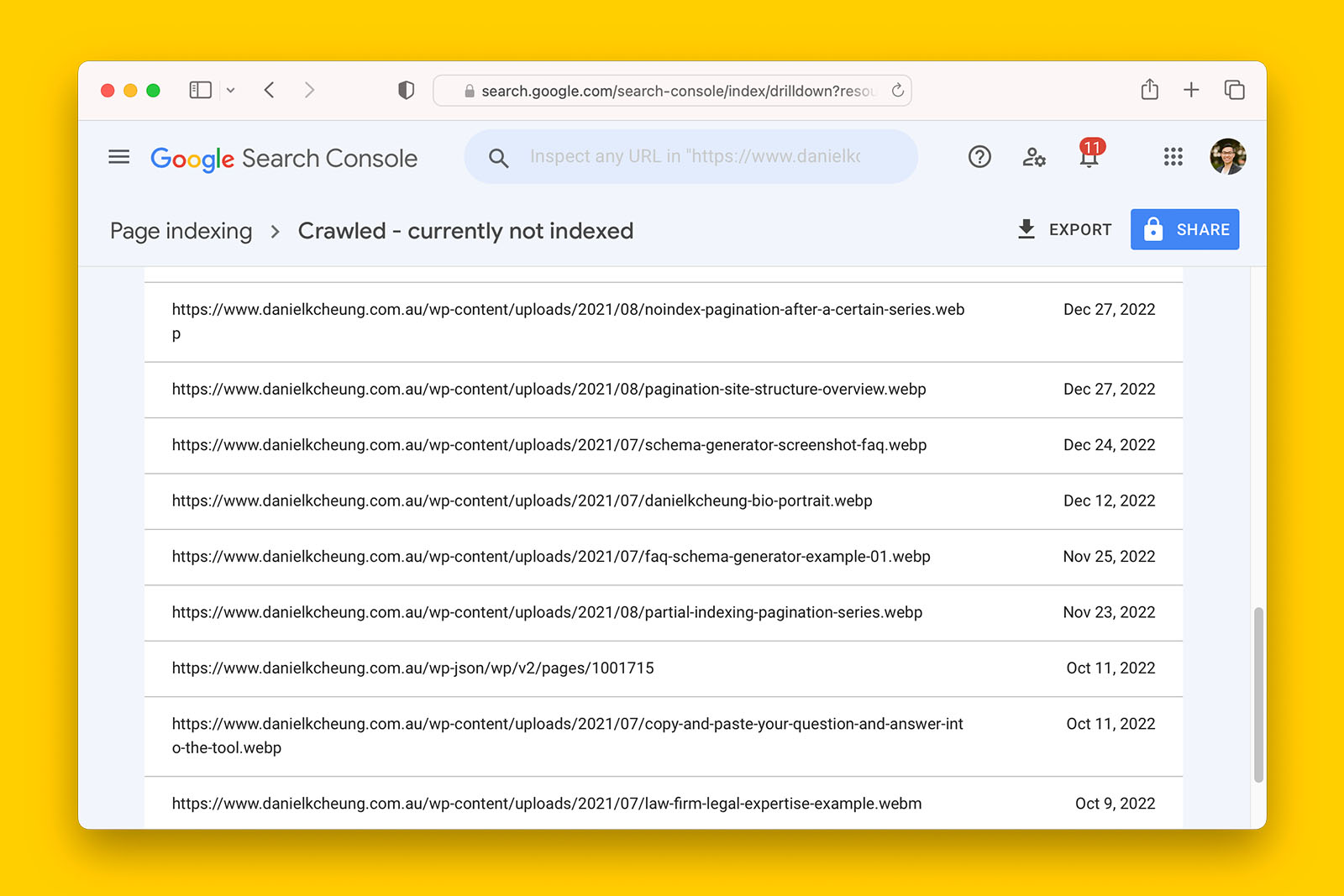

Crawl budget can be an issue for large websites and it is normal for some pages to not be indexed. However, for a website with a million pages, this can add up to tens of thousands of URLs not being indexed. Trying to figure out why this is the case is extremely difficult without multiple sitemaps.

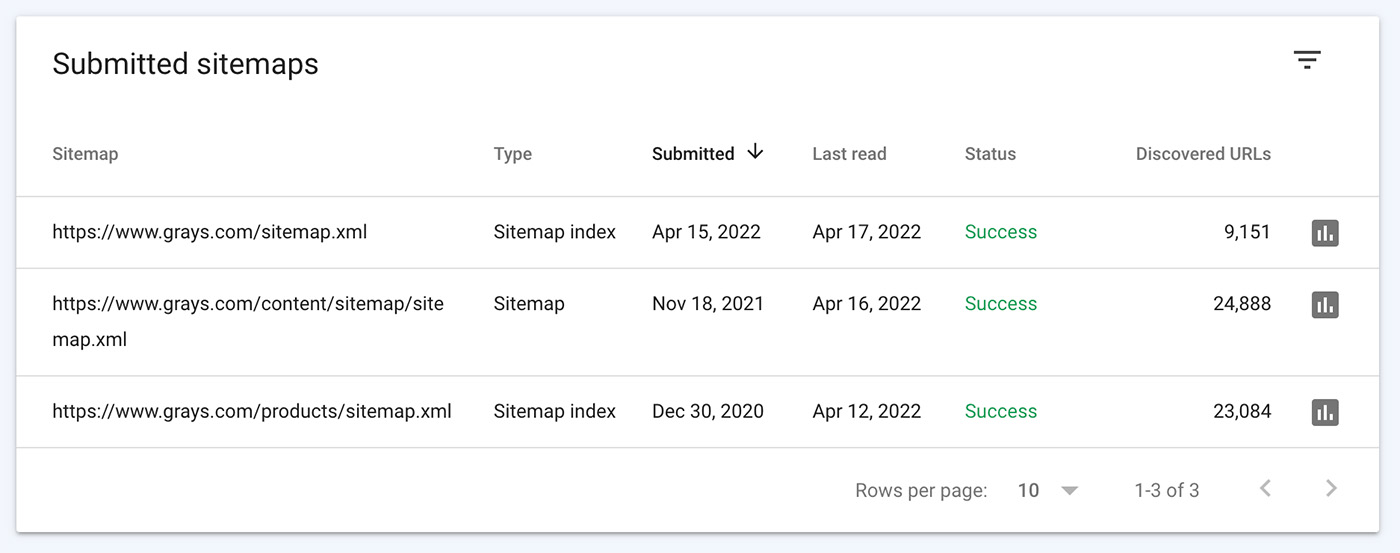

The following screenshot shows that ecommerce marketplace Grays has submitted 2 individual sitemaps to GSC in addition to a sitemap index.

Grays is a website with millions of URLs and it is a prime example of a website that publishes a lot of URLs across multiple categories and topics. They would be better served if they split their content sitemap and product sitemap further. For example, each product category can be its own sitemap and by doing so, it would be easier to analyse for index coverage issues.
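One simple way to split URLs by category is to group them by the first segment of their path. The Python sketch below assumes the site's URL structure puts the category first, which will not be true for every site. The example URLs are placeholders, not real Grays URLs.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def group_by_section(urls: list[str]) -> dict[str, list[str]]:
    """Group URLs by the first path segment, e.g. /cars/... goes under 'cars'."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url in urls:
        segments = [s for s in urlsplit(url).path.split("/") if s]
        section = segments[0] if segments else "root"
        groups[section].append(url)
    return dict(groups)


# Placeholder URLs for illustration only.
urls = [
    "https://www.example.com/cars/sedan-123",
    "https://www.example.com/cars/ute-456",
    "https://www.example.com/wine/shiraz-789",
    "https://www.example.com/",
]
for section, members in group_by_section(urls).items():
    print(section, len(members))
```

Each group can then be written out as its own sitemap, so that index coverage can be reviewed per category in Search Console.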

You are migrating URLs.

Cross-platform migrations usually involve extensive URL mapping for 301 redirects. For example, moving from Adobe Experience Manager (AEM) across to Shopify Plus will mean significant changes to URL structure.

In these instances where URLs are changing, Google advises you to submit a separate sitemap with new URLs and another sitemap with old URLs that are being redirected away to Google Search Console.

Doing this will allow you to see the following observations in the index coverage tab in Search Console:

- At the initial stage of the migration, the new sitemap will have zero pages indexed while the sitemap containing old URLs will have many pages indexed.

- Over time, as Google crawls the new site and its URLs, the number of indexed URLs in the sitemap with the old URLs will drop to zero and the new sitemap will increase in the number of indexed URLs.

Seeing these trends will provide you with data to show that the migration is progressing well.
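If you already have a redirect map for the migration, the two URL lists fall straight out of it. Here is a minimal Python sketch, assuming the map is a dictionary of old URL to new URL. The URLs and the function name are made up for illustration.

```python
def migration_url_lists(redirect_map: dict[str, str]) -> tuple[list[str], list[str]]:
    """Return (old URLs, new URLs) for the two migration sitemaps."""
    old_urls = sorted(redirect_map)
    # Several old URLs may redirect to the same new URL, so deduplicate.
    new_urls = sorted(set(redirect_map.values()))
    return old_urls, new_urls


# Placeholder redirect map for illustration only.
redirects = {
    "https://www.example.com/content/shoes.html": "https://www.example.com/collections/shoes",
    "https://www.example.com/content/boots.html": "https://www.example.com/collections/shoes",
    "https://www.example.com/content/about.html": "https://www.example.com/pages/about",
}
old_urls, new_urls = migration_url_lists(redirects)
print(len(old_urls), len(new_urls))
```

The first list goes into the sitemap of old, redirected URLs and the second into the sitemap of new URLs.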

You are doing a purge of URLs.

Similar to the migration scenario, it is common for websites to go through content audits and purges.

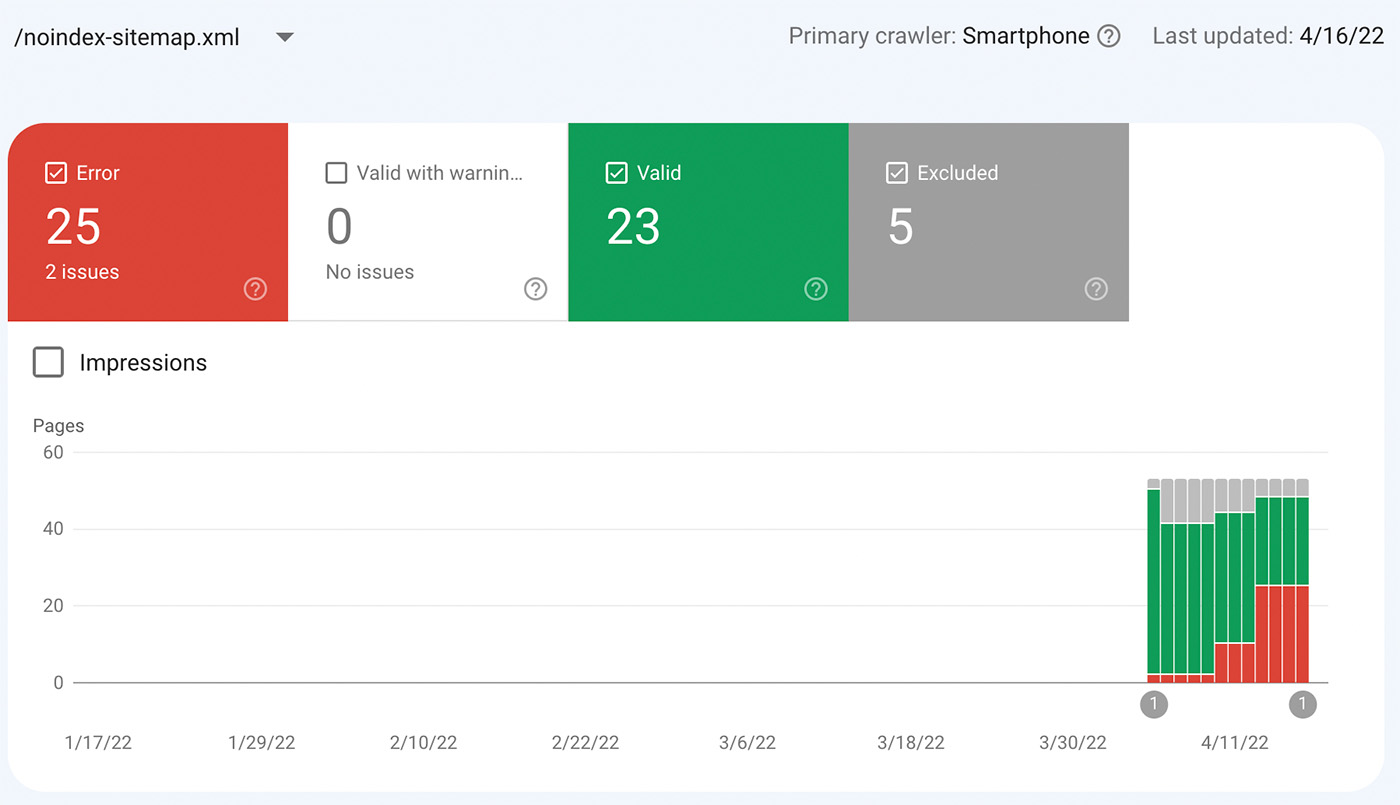

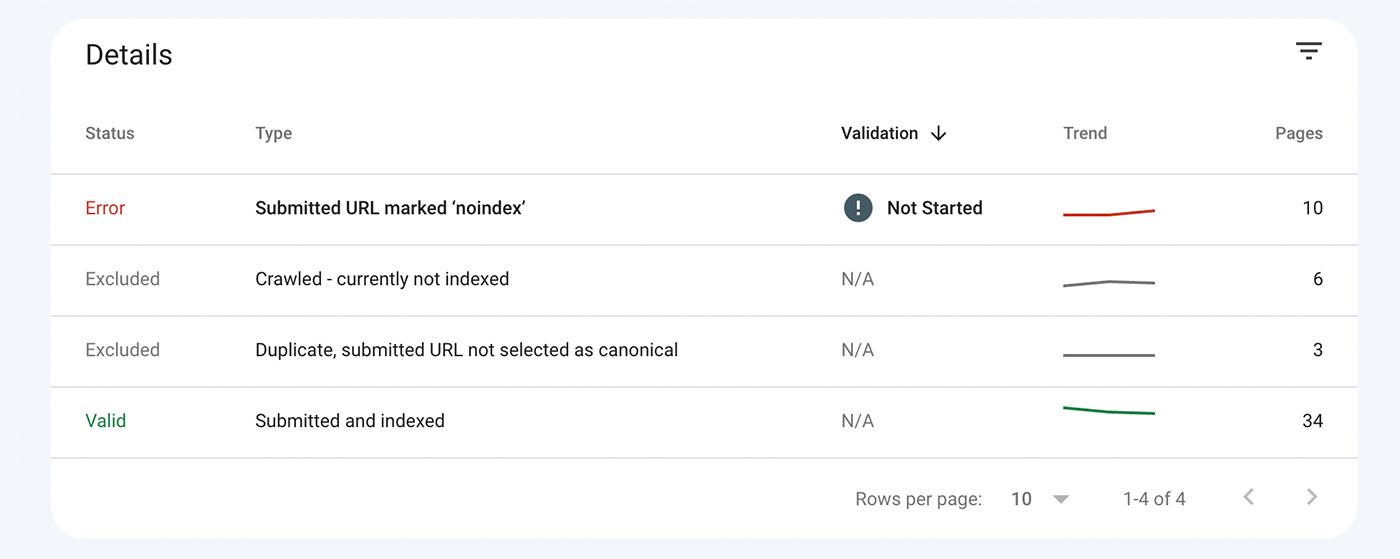

For example, for a small ecommerce site I decided to noindex 53 product detail pages. To test whether Google would discover this change quicker, I chose to create and submit to GSC an XML sitemap of all these 53 product URLs.

After a week, the following screenshot shows some signs of progress.

That is, Google has come across some of these URLs and realised that they should be removed from its index. As a result, the number of valid URLs is decreasing and errors and excluded URLs are increasing.

In closing.

Sitemaps are often overlooked tools that can bring a lot of value in the practice and execution of technical SEO.